Troubleshooting Keepalived Failing to Bind a VIP

[Date: 2015-03-14] Source: Linux社区 Author: john88wang

I. Problem Description

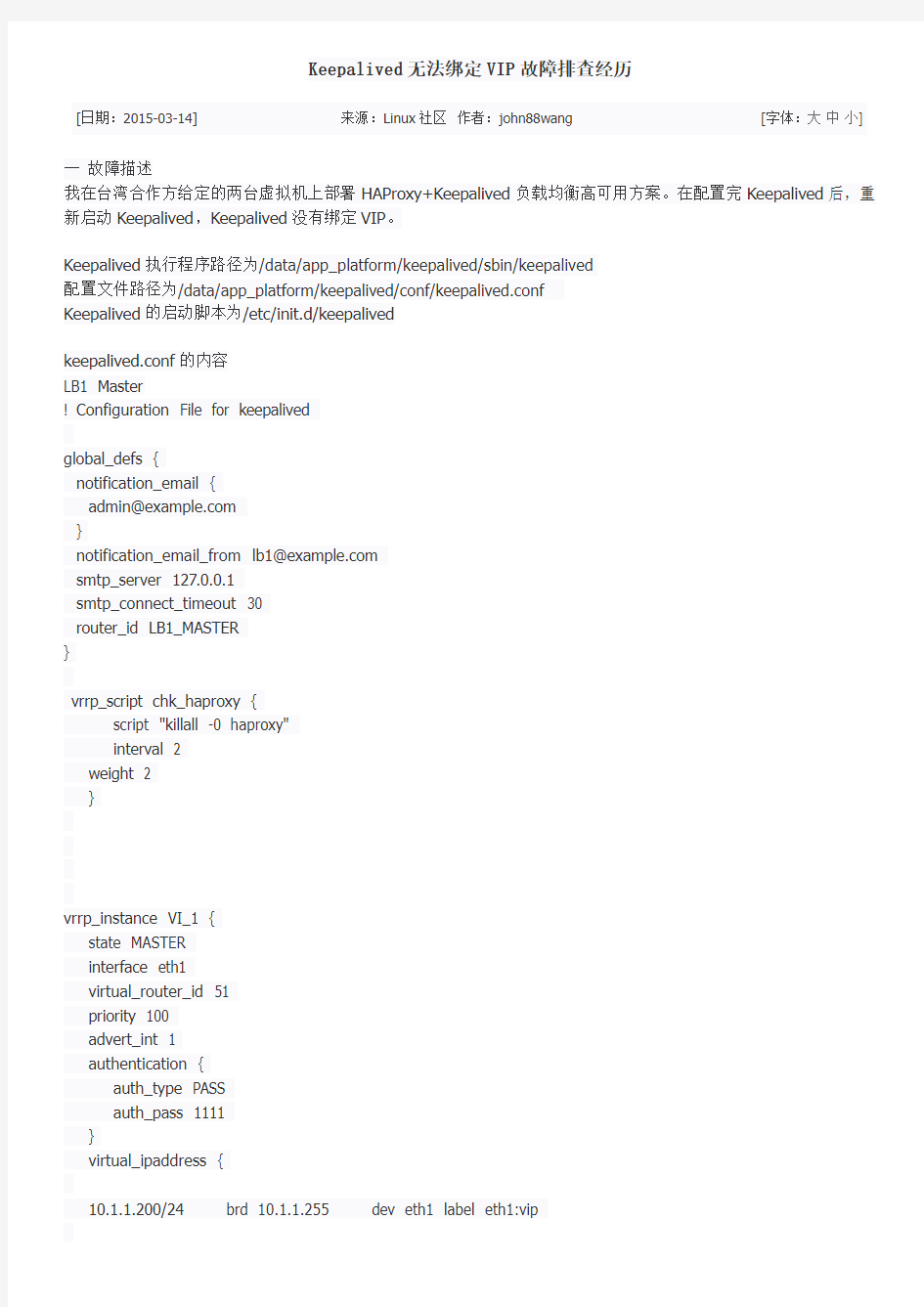

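I was deploying an HAProxy + Keepalived high-availability load-balancing setup on two virtual machines provided by a partner in Taiwan. After configuring Keepalived and restarting it, Keepalived did not bind the VIP.

Keepalived binary: /data/app_platform/keepalived/sbin/keepalived

Configuration file: /data/app_platform/keepalived/conf/keepalived.conf

Init script: /etc/init.d/keepalived

Contents of keepalived.conf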

LB1 Master

! Configuration File for keepalived

global_defs {

notification_email {

admin@https://www.sodocs.net/doc/c210887482.html,

}

notification_email_from lb1@https://www.sodocs.net/doc/c210887482.html,

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LB1_MASTER

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.1.1.200/24 brd 10.1.1.255 dev eth1 label eth1:vip

}

track_script {

chk_haproxy

}

}

Restart Keepalived and check the log

Mar 3 18:09:00 cv00300005248-1 Keepalived[20138]: Stopping Keepalived v1.2.15 (02/28,2015)

Mar 3 18:09:00 cv00300005248-1 Keepalived[20259]: Starting Keepalived v1.2.15 (02/28,2015)

Mar 3 18:09:00 cv00300005248-1 Keepalived[20260]: Starting Healthcheck child process, pid=20261

Mar 3 18:09:00 cv00300005248-1 Keepalived[20260]: Starting VRRP child process, pid=20262

Mar 3 18:09:00 cv00300005248-1 Keepalived_vrrp[20262]: Registering Kernel netlink reflector

Mar 3 18:09:00 cv00300005248-1 Keepalived_vrrp[20262]: Registering Kernel netlink command channel

Mar 3 18:09:00 cv00300005248-1 Keepalived_vrrp[20262]: Registering gratuitous ARP shared channel

Mar 3 18:09:00 cv00300005248-1 Keepalived_healthcheckers[20261]: Registering Kernel netlink reflector

Mar 3 18:09:00 cv00300005248-1 Keepalived_healthcheckers[20261]: Registering Kernel netlink command channel

Mar 3 18:09:00 cv00300005248-1 Keepalived_healthcheckers[20261]: Configuration is using : 3924 Bytes

Mar 3 18:09:00 cv00300005248-1 Keepalived_healthcheckers[20261]: Using LinkWatch kernel netlink reflector...

Mar 3 18:09:00 cv00300005248-1 Keepalived_vrrp[20262]: Configuration is using : 55712 Bytes

Mar 3 18:09:00 cv00300005248-1 Keepalived_vrrp[20262]: Using LinkWatch kernel netlink reflector...

Mar 3 18:09:18 cv00300005248-1 kernel: __ratelimit: 1964 callbacks suppressed

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Mar 3 18:09:18 cv00300005248-1 kernel: Neighbour table overflow.

Check whether the VIP is bound

$ ifconfig eth1:vip

eth1:vip Link encap:Ethernet HWaddr 00:16:3E:F2:37:6B

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:13

No VIP is bound.

II. Troubleshooting Process

1) Check the VIP configuration

Confirm with the partner the details of the VIP they provided

IPADDR 10.1.1.200

NETMASK 255.255.255.0

GATEWAY 10.1.1.1

Broadcast 10.1.1.255

The configuration here is

10.1.1.200/24 brd 10.1.1.255 dev eth1 label eth1:vip

2) Check the iptables and SELinux settings

$ sudo service iptables stop

$ sudo setenforce 0

setenforce: SELinux is disabled

If iptables has to stay enabled, a rule like the following is needed

iptables -I INPUT -i eth1 -d 224.0.0.0/8 -j ACCEPT

service iptables save

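keepalived uses the multicast address 224.0.0.18 for the VRRP advertisements exchanged between Master and Backup

3) Check the relevant kernel parameters

Kernel parameters to watch in an HAProxy + Keepalived setup: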

# Controls IP packet forwarding

net.ipv4.ip_forward = 1

Enables IP forwarding

net.ipv4.ip_nonlocal_bind = 1

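Allows binding to non-local IP addresses

If LVS in DR or TUN mode is combined with Keepalived, two ARP-related parameters must be set on the back-end real servers. They are set here as well.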

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2
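The parameters above are easy to miss on one of the two nodes, so it can help to check them mechanically. The following is only a sketch: `sysctl_conf_has` is a hypothetical helper name, and it assumes the settings live in a sysctl.conf-style file of `key = value` lines.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a sysctl.conf-style FILE sets KEY to VALUE.
# Whitespace around "=" is ignored and comment lines are skipped.
sysctl_conf_has() {
    file=$1 key=$2 value=$3
    awk -F= -v k="$key" -v v="$value" '
        /^[ \t]*#/ { next }                      # skip comment lines
        {
            gsub(/[ \t]/, "", $1)                # strip blanks from the key
            gsub(/[ \t]/, "", $2)                # strip blanks from the value
            if ($1 == k && $2 == v) found = 1
        }
        END { exit found ? 0 : 1 }
    ' "$file"
}
```

For example, `sysctl_conf_has /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1 || echo "ip_nonlocal_bind not set"` would flag a node where the setting is missing. The check only reads the file; it does not tell you whether the running kernel has already loaded the value.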

4) Check the VRRP settings

LB1 Master

state MASTER

interface eth1

virtual_router_id 51

priority 100

LB2 Backup

state BACKUP

interface eth1

virtual_router_id 51

priority 99

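Master and Backup must use the same virtual_router_id and different priority values; the larger the number, the higher the priority.

5) Suspect a problem with the compiled Keepalived build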

Downloaded and compiled version 1.2.13 and restarted keepalived; the VIP was still not bound.

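Another platform in production runs a keepalived that was installed with yum, so I decided to install keepalived with yum, copy the configuration file over, and see whether the VIP gets bound.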

rpm -ivh https://www.sodocs.net/doc/c210887482.html,/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

yum -y install keepalived

cp /data/app_platform/keepalived/conf/keepalived.conf /etc/keepalived/keepalived.conf

Restart keepalived

Then check the log

Mar 4 16:42:46 xxxxx Keepalived_healthcheckers[17332]: Registering Kernel netlink reflector

Mar 4 16:42:46 xxxxx Keepalived_healthcheckers[17332]: Registering Kernel netlink command channel

Mar 4 16:42:46 xxxxx Keepalived_vrrp[17333]: Opening file '/etc/keepalived/keepalived.conf'.

Mar 4 16:42:46 xxxxx Keepalived_vrrp[17333]: Configuration is using : 65250 Bytes

Mar 4 16:42:46 xxxxx Keepalived_vrrp[17333]: Using LinkWatch kernel netlink reflector...

Mar 4 16:42:46 xxxxx Keepalived_vrrp[17333]: VRRP sockpool: [ifindex(3), proto(112), unicast(0), fd(10,11)]

Mar 4 16:42:46 xxxxx Keepalived_healthcheckers[17332]: Opening file '/etc/keepalived/keepalived.conf'.

Mar 4 16:42:46 xxxxx Keepalived_healthcheckers[17332]: Configuration is using : 7557 Bytes

Mar 4 16:42:46 xxxxx Keepalived_healthcheckers[17332]: Using LinkWatch kernel netlink reflector...

Mar 4 16:42:46 xxxxx Keepalived_vrrp[17333]: VRRP_Script(chk_haproxy) succeeded

Mar 4 16:42:47 xxxxx Keepalived_vrrp[17333]: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 4 16:42:48 xxxxx Keepalived_vrrp[17333]: VRRP_Instance(VI_1) Entering MASTER STATE

Mar 4 16:42:48 xxxxx Keepalived_vrrp[17333]: VRRP_Instance(VI_1) setting protocol VIPs.

Mar 4 16:42:48 xxxxx Keepalived_vrrp[17333]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

Mar 4 16:42:48 xxxxx Keepalived_healthcheckers[17332]: Netlink reflector reports IP 10.1.1.200 added

Mar 4 16:42:53 xxxxx Keepalived_vrrp[17333]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

Check the IP binding again

$ ifconfig eth1:vip

eth1:vip Link encap:Ethernet HWaddr 00:16:3E:F2:37:6B

inet addr:10.1.1.200 Bcast:10.1.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:13

Then remove the yum-installed keepalived

yum remove keepalived

Restore the original init script /etc/init.d/keepalived

After restarting keepalived the VIP still could not be bound

Suspect the keepalived init script /etc/init.d/keepalived

Inspect /etc/init.d/keepalived

# Source function library.

. /etc/rc.d/init.d/functions

exec="/data/app_platform/keepalived/sbin/keepalived"

prog="keepalived"

config="/data/app_platform/keepalived/conf/keepalived.conf"

[ -e /etc/sysconfig/$prog ] && . /etc/sysconfig/$prog

lockfile=/var/lock/subsys/keepalived

start() {

[ -x $exec ] || exit 5

[ -e $config ] || exit 6

echo -n $"Starting $prog: "

daemon $exec $KEEPALIVED_OPTIONS

retval=$?

echo

[ $retval -eq 0 ] && touch $lockfile

return $retval

}

The key is this line

daemon $exec $KEEPALIVED_OPTIONS

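Because /etc/sysconfig/keepalived was not copied over, KEEPALIVED_OPTIONS is empty and the script simply runs daemon /data/app_platform/keepalived/sbin/keepalived. keepalived uses /etc/keepalived/keepalived.conf by default, whereas this setup keeps its configuration file elsewhere, so the line has to be changed to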

daemon $exec -D -f $config
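To make the effect of that change concrete, here is a minimal sketch of how a start line could be assembled so that a missing sysconfig file no longer silently drops the config path. `keepalived_cmdline` is a hypothetical helper, not part of the original script; `-D` (detailed logging) and `-f` (configuration file) are existing keepalived options.

```shell
#!/bin/sh
# Hypothetical helper: print the command line an init script would run.
# If no options were supplied (for example /etc/sysconfig/keepalived is
# missing), fall back to "-D"; always pass -f so that a non-default
# configuration path is honoured.
keepalived_cmdline() {
    exec_path=$1 config=$2 options=${3:--D}
    echo "$exec_path $options -f $config"
}
```

Called as `keepalived_cmdline "$exec" "$config" "$KEEPALIVED_OPTIONS"`, it prints a command that always names the configuration file explicitly, whether or not KEEPALIVED_OPTIONS was set.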

Restart keepalived and check the log and the VIP binding

$ ifconfig eth1:vip

eth1:vip Link encap:Ethernet HWaddr 00:16:3E:F2:37:6B

inet addr:10.1.1.200 Bcast:10.1.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:13

6) Apply the same change to the keepalived init script on LB2 Backup and observe VIP takeover

On LB1 Master

$ ifconfig eth1:vip

eth1:vip Link encap:Ethernet HWaddr 00:16:3E:F2:37:6B

inet addr:10.1.1.200 Bcast:10.1.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:13

On LB2 Backup

$ ifconfig eth1:vip

eth1:vip Link encap:Ethernet HWaddr 00:16:3E:F2:37:6B

inet addr:10.1.1.200 Bcast:10.1.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:13

Now a new problem appears: both LB1 Master and LB2 Backup have bound the VIP 10.1.1.200, which is not normal.

Log in to 10.1.1.200 from LB1 and from LB2

[lb1 ~]$ ssh 10.1.1.200

Last login: Wed Mar 4 17:31:33 2015 from 10.1.1.200

[lb1 ~]$

[lb2 ~]$ ssh 10.1.1.200

Last login: Wed Mar 4 17:54:57 2015 from 101.95.153.246

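[lb2 ~]$

On LB1, stop keepalived and ping 10.1.1.200: the ping fails

On LB2, stop keepalived and ping 10.1.1.200: the ping fails as well

Then start keepalived on LB1: LB1 can ping 10.1.1.200, LB2 cannot

Start keepalived on LB2: LB2 can ping 10.1.1.200

This shows that LB1 and LB2 each bind the VIP 10.1.1.200 to their own eth1 interface. The two hosts are not exchanging VRRP messages, so no VRRP priority comparison takes place.

7) Find out what is blocking VRRP communication

Restart Keepalived on LB1 Master and check the log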

Mar 5 15:45:36 gintama-taiwan-lb1 Keepalived_vrrp[32303]: Configuration is using : 65410 Bytes

Mar 5 15:45:36 gintama-taiwan-lb1 Keepalived_vrrp[32303]: Using LinkWatch kernel netlink reflector...

Mar 5 15:45:36 gintama-taiwan-lb1 Keepalived_vrrp[32303]: VRRP sockpool: [ifindex(3), proto(112), unicast(0), fd(10,11)]

Mar 5 15:45:36 gintama-taiwan-lb1 Keepalived_vrrp[32303]: VRRP_Script(chk_haproxy) succeeded

Mar 5 15:45:37 gintama-taiwan-lb1 Keepalived_vrrp[32303]: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 5 15:45:38 gintama-taiwan-lb1 Keepalived_vrrp[32303]: VRRP_Instance(VI_1) Entering MASTER STATE

Mar 5 15:45:38 gintama-taiwan-lb1 Keepalived_vrrp[32303]: VRRP_Instance(VI_1) setting protocol VIPs.

Mar 5 15:45:38 gintama-taiwan-lb1 Keepalived_vrrp[32303]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

Mar 5 15:45:38 gintama-taiwan-lb1 Keepalived_healthcheckers[32302]: Netlink reflector reports IP 10.1.1.200 added

Mar 5 15:45:43 gintama-taiwan-lb1 Keepalived_vrrp[32303]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

Keepalived on LB1 Master goes straight to MASTER state and takes over the VIP

Then restart Keepalived on LB2 Backup and check the log

Mar 5 15:47:42 gintama-taiwan-lb2 Keepalived_vrrp[30619]: Configuration is using : 65408 Bytes

Mar 5 15:47:42 gintama-taiwan-lb2 Keepalived_vrrp[30619]: Using LinkWatch kernel netlink reflector...

Mar 5 15:47:42 gintama-taiwan-lb2 Keepalived_vrrp[30619]: VRRP_Instance(VI_1) Entering BACKUP STATE

Mar 5 15:47:42 gintama-taiwan-lb2 Keepalived_vrrp[30619]: VRRP sockpool: [ifindex(3), proto(112), unicast(0), fd(10,11)]

Mar 5 15:47:46 gintama-taiwan-lb2 Keepalived_vrrp[30619]: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 5 15:47:47 gintama-taiwan-lb2 Keepalived_vrrp[30619]: VRRP_Instance(VI_1) Entering MASTER STATE

Mar 5 15:47:47 gintama-taiwan-lb2 Keepalived_vrrp[30619]: VRRP_Instance(VI_1) setting protocol VIPs.

Mar 5 15:47:47 gintama-taiwan-lb2 Keepalived_vrrp[30619]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

Mar 5 15:47:47 gintama-taiwan-lb2 Keepalived_healthcheckers[30618]: Netlink reflector reports IP 10.1.1.200 added

Mar 5 15:47:52 gintama-taiwan-lb2 Keepalived_vrrp[30619]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

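Keepalived on LB2 first enters BACKUP state, then switches to MASTER state and takes over the VIP, even though LB1 is still running as master.

This indicates a problem with VRRP multicast.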
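The symptom in the LB2 log (BACKUP first, MASTER a few seconds later while the real master is alive) can be spotted mechanically. The sketch below assumes the message wording of the 1.2.x logs quoted in this article; `backup_promoted_itself` is a hypothetical helper name, and other Keepalived versions may phrase the messages differently.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the log shows the instance entering
# BACKUP state and later entering MASTER state. On a backup node whose
# master is known to be healthy, that pattern suggests the master's
# advertisements are not arriving.
backup_promoted_itself() {
    awk '
        /Entering BACKUP STATE/                  { was_backup = 1 }
        was_backup && /Entering MASTER STATE/    { promoted = 1 }
        END { exit promoted ? 0 : 1 }
    ' "$1"
}
```

A call such as `backup_promoted_itself /var/log/messages && echo "backup promoted itself"` only reports the pattern. Whether it is a fault still depends on knowing that the master was up at the time.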

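Since VRRP multicast is not working, try sending the VRRP advertisements by unicast instead. Change the configuration on LB1 and LB2

LB1

Add the following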

unicast_src_ip 10.1.1.12

unicast_peer {

10.1.1.17

}

LB2

Add the following

unicast_src_ip 10.1.1.17

unicast_peer {

10.1.1.12

}

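unicast_src_ip is the source IP address used for sending VRRP unicast advertisements

unicast_peer is the IP address of the peer that receives the VRRP unicast advertisements

Then reload keepalived on each host and watch the log

LB1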

Mar 5 16:13:35 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP_Instance(VI_1) setting protocol VIPs.

Mar 5 16:13:35 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP_Script(chk_haproxy) considered successful on reload

Mar 5 16:13:35 gintama-taiwan-lb1 Keepalived_vrrp[2551]: Configuration is using : 65579 Bytes

Mar 5 16:13:35 gintama-taiwan-lb1 Keepalived_vrrp[2551]: Using LinkWatch kernel netlink reflector...

Mar 5 16:13:35 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP sockpool: [ifindex(3), proto(112), unicast(1), fd(10,11)]

Mar 5 16:13:36 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP_Instance(VI_1) Transition to MASTER STATE

Mar 5 16:13:48 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

Mar 5 16:13:48 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

Mar 5 16:13:48 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

Mar 5 16:13:48 gintama-taiwan-lb1 Keepalived_vrrp[2551]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth1 for 10.1.1.200

LB2

Mar 5 16:13:48 gintama-taiwan-lb2 Keepalived_vrrp[453]: VRRP_Instance(VI_1) Received higher prio advert

Mar 5 16:13:48 gintama-taiwan-lb2 Keepalived_vrrp[453]: VRRP_Instance(VI_1) Entering BACKUP STATE

Mar 5 16:13:48 gintama-taiwan-lb2 Keepalived_vrrp[453]: VRRP_Instance(VI_1) removing protocol VIPs.

Mar 5 16:13:48 gintama-taiwan-lb2 Keepalived_healthcheckers[452]: Netlink reflector reports IP 10.1.1.200 removed

Checking the VIP binding shows that the VIP has been removed from LB2

Ping the VIP 10.1.1.200 from LB1 and from LB2

[lb1 ~]$ ping -c 5 10.1.1.200

PING 10.1.1.200 (10.1.1.200) 56(84) bytes of data.

64 bytes from 10.1.1.200: icmp_seq=1 ttl=64 time=0.028 ms

64 bytes from 10.1.1.200: icmp_seq=2 ttl=64 time=0.020 ms

64 bytes from 10.1.1.200: icmp_seq=3 ttl=64 time=0.020 ms

64 bytes from 10.1.1.200: icmp_seq=4 ttl=64 time=0.021 ms

64 bytes from 10.1.1.200: icmp_seq=5 ttl=64 time=0.027 ms

--- 10.1.1.200 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 3999ms

rtt min/avg/max/mdev = 0.020/0.023/0.028/0.004 ms

[lb2 ~]$ ping -c 5 10.1.1.200

PING 10.1.1.200 (10.1.1.200) 56(84) bytes of data.

--- 10.1.1.200 ping statistics ---

5 packets transmitted, 0 received, 100% packet loss, time 14000ms

With the VIP held by LB1, LB2 cannot ping it. Likewise, after stopping Keepalived on LB1, LB2 takes over the VIP, but LB1 then cannot ping it.

Capture packets on LB1 and LB2

[lb1 ~]$ sudo tcpdump -vvv -i eth1 host 10.1.1.17

tcpdump: listening on eth1, link-type EN10MB (Ethernet), capture size 65535 bytes

16:46:04.827357 IP (tos 0xc0, ttl 255, id 328, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

16:46:05.827459 IP (tos 0xc0, ttl 255, id 329, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

16:46:06.828234 IP (tos 0xc0, ttl 255, id 330, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

16:46:07.828338 IP (tos 0xc0, ttl 255, id 331, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

[lb2 ~]$ sudo tcpdump -vvv -i eth1 host 10.1.1.12

tcpdump: listening on eth1, link-type EN10MB (Ethernet), capture size 65535 bytes

16:48:07.000029 IP (tos 0xc0, ttl 255, id 450, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

16:48:07.999539 IP (tos 0xc0, ttl 255, id 451, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

16:48:08.999252 IP (tos 0xc0, ttl 255, id 452, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

16:48:09.999560 IP (tos 0xc0, ttl 255, id 453, offset 0, flags [none], proto VRRP (112), length 40)

10.1.1.12 > 10.1.1.17: VRRPv2, Advertisement, vrid 51, prio 102, authtype simple, intvl 1s, length 20, addrs: 10.1.1.200 auth "1111^@^@^@^@"

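Ping tests against the VIP from other VMs on the physical hosts of LB1 and LB2 gave the same result: when the VIP is bound on LB1, only VMs on LB1's physical host can ping it and VMs on LB2's physical host cannot, and vice versa.

A colleague suggested that VRRP might be affected by DHCP. It turned out that the IP addresses of the VMs provided by the partner were indeed assigned by DHCP, but testing showed that the problem had nothing to do with DHCP. Even so, it is best not to obtain IP addresses via DHCP in production.

8) Ask the partner's engineers to help investigate why the VIP cannot be pinged

It finally turned out that the partner's internal network is a virtual network in which the gateway has no real function, so some VMs could not reach the VIP through the gateway 10.1.1.1.

The VM provider's engineers then debugged HAProxy + Keepalived on the servers themselves and, through network settings on their side, made the VIP 10.1.1.200 reachable over the internal network. But when I tested, I found that Keepalived did not move the VIP when HAProxy died.

9) Fix Keepalived not moving the VIP when HAProxy dies

Repeated tests showed that the VIP moved when Keepalived itself died, but not when HAProxy died.

After carefully checking the configuration file and reading the relevant documentation, the cause turned out to be the relative values of Keepalived's weight and priority parameters.

In my original configuration, LB1 had weight 2 and priority 100; LB2 had weight 2 and priority 99.

While debugging, the partner had changed LB1's priority to 160. With that value the VIP never moved to LB2 when HAProxy on LB1 died, no matter how often we tested. Changing LB1's priority back to 100 fixed it.

Note:

The master's priority minus the vrrp_script weight must be lower than the backup's priority.

A vrrp_script check counts as successful when the script returns 0; any other return value counts as a failure.

* With a positive weight, the weight is added to priority when the check succeeds and is not added when it fails.

Master check fails:

a switchover happens when master priority < backup priority + weight.

Master check succeeds:

the master stays master when master priority + weight > backup priority + weight.

* With a negative weight, priority is unchanged when the check succeeds and becomes priority - abs(weight) when it fails.

Master check fails:

a switchover happens when master priority - abs(weight) < backup priority.

Master check succeeds:

the master stays master when master priority > backup priority.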
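The rules above reduce to simple integer arithmetic, which the following sketch works through with the numbers from this article. The function names are hypothetical, and the model is deliberately simplified: one tracked script, the same weight on both nodes, and no tie-breaking for equal priorities.

```shell
#!/bin/sh
# effective_priority BASE WEIGHT SCRIPT_OK
# SCRIPT_OK is 1 when the tracked script exits 0, otherwise 0.
effective_priority() {
    base=$1 weight=$2 ok=$3
    if [ "$weight" -gt 0 ] && [ "$ok" -eq 1 ]; then
        echo $((base + weight))      # positive weight: added on success
    elif [ "$weight" -lt 0 ] && [ "$ok" -eq 0 ]; then
        echo $((base + weight))      # negative weight: subtracted on failure
    else
        echo "$base"
    fi
}

# vip_moves MASTER_PRIO BACKUP_PRIO WEIGHT
# Succeeds if the VIP moves when the check fails on the master only.
vip_moves() {
    m=$(effective_priority "$1" "$3" 0)   # master: check failed
    b=$(effective_priority "$2" "$3" 1)   # backup: check still passing
    [ "$m" -lt "$b" ]
}
```

With the original values, `effective_priority 100 2 1` gives 102, which matches the `prio 102` seen in the tcpdump capture. `vip_moves 100 99 2` succeeds (100 < 101), while `vip_moves 160 99 2` fails (160 is not below 101), which is exactly the behaviour observed after the partner raised LB1's priority to 160.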

The final configuration file is:

! Configuration File for keepalived

global_defs {

notification_email {

admin@https://www.sodocs.net/doc/c210887482.html,

}

notification_email_from lb1@https://www.sodocs.net/doc/c210887482.html,

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LB1_MASTER

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

# VIP for the external network

vrrp_instance eth0_VIP {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

unicast_src_ip 8.8.8.6 # use VRRP unicast

unicast_peer {

8.8.8.7

}

virtual_ipaddress {

8.8.8.8/25 brd 8.8.8.255 dev eth0 label eth0:vip

}

track_script {

chk_haproxy

}

}

# VIP for the internal network

vrrp_instance eth1_VIP {

state MASTER

interface eth1

virtual_router_id 52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

unicast_src_ip 10.1.1.12

unicast_peer {

10.1.1.17

}

virtual_ipaddress {

10.1.1.200/24 brd 10.1.1.255 dev eth1 label eth1:vip

}

track_script {

chk_haproxy

}

}

III. Summary

When configuring Keepalived, keep the following in mind:

A. Enable IP forwarding and non-local IP binding in the kernel; with LVS in DR mode, also set the two ARP-related parameters.

B. If the network Keepalived runs on does not allow multicast, use VRRP unicast.

C. Pay attention to the weight and priority values on master and backup; unreasonable values can prevent the VIP from moving.

D. If the configuration file is not the default one, pass it with the -f option when starting Keepalived.


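Permanent link to this article: https://www.sodocs.net/doc/c210887482.html,/Linux/2015-03/114981.htm

Today, after compiling and installing keepalived, starting it failed with: keepalived:/bin/bash: keepalived: command not found

Thinking back over the installation, the install path had been set with prefix, so the keepalived executable should be under prefix/sbin. Creating a symbolic link (ln -s prefix/sbin/keepalived /usr/sbin/) resolves the keepalived:/bin/bash: keepalived: command not found error.

There is one more issue. If the server the software is installed on is not a conventional server, the same error is reported. I am using an OpenVZ VPS, and ip a prints the following: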

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

3: venet0:

inet 127.0.0.1/32 scope host venet0

inet 0.0.0.135/32 brd 0.0.0.135 scope global venet0:0

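So the interface already has IP addresses bound in much the same way Keepalived would bind them.

With my limited knowledge, I will assume for now that keepalived cannot be used for high availability on such non-conventional servers.

It reports the error keepalived:/bin/bash: keepalived: command not found

Test environment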

A:192.168.0.219 (ubuntu)

B:192.168.0.8 (FreeBsd)

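I am not sure whether one Unix host and one Linux host will work together; keepalived seems to have requirements on the Linux kernel.

Both machines need the keepalived configuration.

keepalived is an IPVS wrapper and health-check service. The FreeBSD port does not support keepalived's VRRP stack. :( So I will test with two Linux machines, which can later be deployed as a dual-Linux setup.

Revised test environment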

A:192.168.0.219 (ubuntu)

B:192.168.0.19 (CentOs)

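These two machines are already set up as master and slave of each other.

Installing keepalived

At the time of writing, the latest keepalived version is 1.2.2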

wget https://www.sodocs.net/doc/c210887482.html,/software/keepalived-1.2.2.tar.gz

tar zxvf keepalived-1.2.2.tar.gz

Installing keepalived on A (Ubuntu):

xiaofei@xiaofei-desktop:~/keepalived-1.2.2$ ./configure

configure: error:

!!! OpenSSL is not properly installed on your system. !!!

!!! Can not include OpenSSL headers files.

configure fails, reporting that OpenSSL is not properly installed.

Running sudo apt-get install libssl-dev fixes this error. Then a new error appears.

checking for poptGetContext in -lpopt... no

configure: error: Popt libraries is required

Running sudo apt-get install libpopt-dev fixes it.

configure: creating ./config.status

config.status: creating Makefile

config.status: creating genhash/Makefile

config.status: WARNING: 'genhash/Makefile.in' seems to ignore the --datarootdir setting

config.status: creating keepalived/core/Makefile

config.status: creating keepalived/include/config.h

config.status: creating keepalived.spec

config.status: creating keepalived/Makefile

config.status: WARNING: 'keepalived/Makefile.in' seems to ignore the --datarootdir setting

config.status: creating lib/Makefile

config.status: creating keepalived/vrrp/Makefile

Keepalived configuration

------------------------

Keepalived version : 1.2.2

Compiler : gcc

Compiler flags : -g -O2

Extra Lib : -lpopt -lssl -lcrypto

Use IPVS Framework : No

IPVS sync daemon support : No

Use VRRP Framework : Yes

Use Debug flags : No

Then: make && sudo make install

xiaofei@xiaofei-desktop:~$ whereis keepalived

keepalived: /usr/local/sbin/keepalived /usr/local/etc/keepalived

Installing keepalived on B (CentOS):

This CentOS machine is freshly installed, so more packages will probably be needed.

[root@centos keepalived-1.2.2]# ./configure

checking for gcc... no

checking for cc... no

checking for cl.exe... no

configure: error: in `/root/keepalived/keepalived-1.2.2':

configure: error: no acceptable C compiler found in $PATH

GCC error: fixed with yum install gcc

checking openssl/ssl.h usability... no

checking openssl/ssl.h presence... no

checking for openssl/ssl.h... no

configure: error:

!!! OpenSSL is not properly installed on your system. !!!

!!! Can not include OpenSSL headers files. !!!

OpenSSL error: fixed with yum install openssl openssl-devel

checking for poptGetContext in -lpopt... no

configure: error: Popt libraries is required

Popt error: fixed with yum install popt popt-devel

configure: creating ./config.status

config.status: creating Makefile

config.status: creating genhash/Makefile

config.status: WARNING: 'genhash/Makefile.in' seems to ignore the --datarootdir setting

config.status: creating keepalived/core/Makefile

config.status: creating keepalived/include/config.h

config.status: creating keepalived.spec

config.status: creating keepalived/Makefile

config.status: WARNING: 'keepalived/Makefile.in' seems to ignore the --datarootdir setting

config.status: creating lib/Makefile

config.status: creating keepalived/vrrp/Makefile

Keepalived configuration

------------------------

Keepalived version : 1.2.2

Compiler : gcc

Compiler flags : -g -O2

Extra Lib : -lpopt -lssl -lcrypto

Use IPVS Framework : No

IPVS sync daemon support : No

Use VRRP Framework : Yes

Use Debug flags : No

Then run make && make install

[root@centos keepalived-1.2.2]# whereis keepalived

keepalived: /usr/local/sbin/keepalived /usr/local/etc/keepalived

Installing keepalived again

The earlier build showed Use IPVS Framework : No, so LVS support should be installed first.

A: sudo apt-get install ipvsadm

B: yum install ipvsadm

A:

./configure --with-kernel-dir=/usr/src/linux-headers-2.6.32-41-generic

There is one more warning:

checking for nl_handle_alloc in -lnl... no

configure: WARNING: keepalived will be built without libnl support.

sudo apt-get install libnl-dev

Rebuild once more, including make && make install

Keepalived configuration

------------------------

Keepalived version : 1.2.2

Compiler : gcc

Compiler flags : -g -O2

Extra Lib : -lpopt -lssl -lcrypto -lnl

Use IPVS Framework : Yes

IPVS sync daemon support : Yes

IPVS use libnl : Yes

Use VRRP Framework : Yes

Use Debug flags : No

I am not sure whether this has any effect.

B:

./configure --with-kernel-dir=/usr/src/kernels/2.6.32-220.el6.x86_64

checking for nl_handle_alloc in -lnl... no

configure: WARNING: keepalived will be built without libnl support.

yum install libnl-devel

Another error remains:

checking net/ip_vs.h usability... no

checking net/ip_vs.h presence... no

checking for net/ip_vs.h... no

configure: WARNING: keepalived will be built without LVS support.

yum install kernel-devel

After installation the kernel version has changed as well: 2.6.32-220.17.1.el6.x86_64

./configure --with-kernel-dir=/usr/src/kernels/2.6.32-220.17.1.el6.x86_64

Keepalived configuration

------------------------

Keepalived version : 1.2.2

Compiler : gcc

Compiler flags : -g -O2

Extra Lib : -lpopt -lssl -lcrypto -lnl

Use IPVS Framework : Yes

IPVS sync daemon support : Yes

IPVS use libnl : Yes

Use VRRP Framework : Yes

Use Debug flags : No

Then make && make install

keepalived configuration

Create a configuration file of our own. By default keepalived looks for its configuration file in /etc/keepalived when it starts.

A:

cd /etc/keepalived

sudo vim keepalived.conf

My configuration:

! Configuration File for keepalived

global_defs {
    notification_email {
        xiaolin0199@https://www.sodocs.net/doc/c210887482.html,
    }
    notification_email_from xiaolin0199@https://www.sodocs.net/doc/c210887482.html,
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id MySQL-ha
}

vrrp_instance VI_1 {
    state BACKUP              # BACKUP on both machines
    interface eth0
    virtual_router_id 51
    priority 100              # priority; use 90 on the other machine
    advert_int 1
    nopreempt                 # no preemption; set only on the higher-priority machine
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.200
    }
}

virtual_server 192.168.0.200 3306 {
    delay_loop 2              # check real_server state every 2 seconds
    lb_algo wrr               # LVS scheduling algorithm
    lb_kind DR                # LVS mode
    persistence_timeout 60    # session persistence time
    protocol TCP
    real_server 192.168.0.219 3306 {
        weight 3
        notify_down /usr/local/MySQL/bin/MySQL.sh    # script run when the service is detected down
        TCP_CHECK {
            connect_timeout 10      # connection timeout
            nb_get_retry 3          # number of retries
            delay_before_retry 3    # delay between retries
            connect_port 3306       # health-check port
        }
    }
}

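Write the script to run when the service is detected down

cd /usr/local

mkdir MySQL

cd MySQL

mkdir bin

# sudo vim /usr/local/MySQL/bin/MySQL.sh

Contents:

#!/bin/sh

pkill keepalived

Make it executable:

# chmod +x /usr/local/MySQL/bin/MySQL.sh

Note: this script is the one referenced by the notify_down option in the configuration above. keepalived uses notify_down to react to the state of the real_server service and triggers the script when it detects that the service has failed.

As you can see, the script contains a single command: pkill keepalived kills the keepalived process, which is how automatic MySQL failover is achieved.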

Start keepalived

# sudo /usr/local/sbin/keepalived -D

Then ping the virtual IP we configured (192.168.0.200)

[root@centos etc]# ping 192.168.0.200

PING 192.168.0.200 (192.168.0.200) 56(84) bytes of data.

64 bytes from 192.168.0.200: icmp_seq=1 ttl=64 time=0.951 ms

64 bytes from 192.168.0.200: icmp_seq=2 ttl=64 time=0.173 ms

64 bytes from 192.168.0.200: icmp_seq=3 ttl=64 time=0.204 ms

64 bytes from 192.168.0.200: icmp_seq=4 ttl=64 time=0.175 ms

64 bytes from 192.168.0.200: icmp_seq=5 ttl=64 time=0.138 ms

At this point keepalived is running

xiaofei@xiaofei-desktop:~$ ps ax | grep keepalived

17617 ? Ss 0:00 /usr/local/sbin/keepalived -D

17618 ? S 0:00 /usr/local/sbin/keepalived -D

17619 ? S 0:00 /usr/local/sbin/keepalived -D

17622 pts/1 S+ 0:00 grep --color=auto keepalived

Stop the MySQL service on this machine: sudo /etc/init.d/mysql stop

Check the keepalived state again

xiaofei@xiaofei-desktop:~$ ps ax | grep keepalived

17669 pts/1 S+ 0:00 grep --color=auto keepalived

When keepalived detected that the service was no longer responding, the keepalived process was killed automatically.

B: B is configured the same way as A, except that keepalived.conf differs in three places: the priority is 90, there is no nopreempt setting, and real_server is the local machine's IP

Test it

It kept failing, so check the log: tail -f /var/log/messages

Jun 6 16:15:59 centos Keepalived_healthcheckers: Opening file '/etc/keepalived/keepalived.conf'.

Jun 6 16:15:59 centos Keepalived_healthcheckers: Configuration is using : 11671 Bytes

Jun 6 16:15:59 centos Keepalived: Healthcheck child process(20866) died: Respawning

Jun 6 16:15:59 centos Keepalived: Starting Healthcheck child process, pid=20868

Jun 6 16:15:59 centos Keepalived_healthcheckers: IPVS: Can't initialize ipvs: Protocol not available

Jun 6 16:15:59 centos Keepalived_healthcheckers: Registering Kernel netlink reflector

Jun 6 16:15:59 centos Keepalived_healthcheckers: Registering Kernel netlink command channel

The log on server A looks like this:

Jun 6 16:18:02 xiaofei-desktop Keepalived_vrrp: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

Jun 6 16:18:02 xiaofei-desktop Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.200

Jun 6 16:18:03 xiaofei-desktop Keepalived_vrrp: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

Jun 6 16:18:03 xiaofei-desktop Keepalived_vrrp: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.0.200

IPVS: Can't initialize ipvs: Protocol not available is probably the cause.

After a web search, the workaround is to load the ip_vs kernel module: modprobe -q ip_vs || true

Check the log again:

Jun 6 16:30:30 centos Keepalived_healthcheckers: Configuration is using : 11651 Bytes

Installing keepalived 1.3.5 on CentOS and configuring nginx

Keepalived is free, open-source software written in C that works like a layer 3, 4 and 7 switching mechanism, providing what we usually call layer-3, layer-4 and layer-7 switch functions. It mainly provides load balancing and high availability. Load balancing depends on the Linux virtual server kernel module (ipvs), while high availability is implemented with the VRRP protocol, which provides failover between multiple machines.

Source download page on the official site (as of 2017-07-31 the latest Keepalived release is keepalived-1.3.5.tar.gz): https://www.sodocs.net/doc/c210887482.html,/download.html

# service keepalived start

The error is as follows

Job for keepalived.service failed because a configured resource limit was exceeded. See "systemctl status keepalived.service" and "journalctl -xe" for details.

Check the error

# systemctl status keepalived.service

From the log we can see that the service failed to write its PID file

[root@zk-02 sbin]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: failed (Result: resources) since Fri 2017-08-04 15:32:31 CST; 4min 59s ago

Process: 16764 ExecStart=/usr/local/program/keepalived/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Aug 04 15:32:25 zk-02 Keepalived_healthcheckers[16768]: Activating healthchecker for service [10.10.10.2]:1358

Aug 04 15:32:25 zk-02 Keepalived_healthcheckers[16768]: Activating healthchecker for service [10.10.10.3]:1358

Aug 04 15:32:25 zk-02 Keepalived_healthcheckers[16768]: Activating healthchecker for service [10.10.10.3]:1358

Aug 04 15:32:25 zk-02 Keepalived_vrrp[16769]: (VI_1): No VIP specified; at least one is required

Aug 04 15:32:26 zk-02 Keepalived[16766]: Keepalived_vrrp exited with permanent error CONFIG. Terminating

Aug 04 15:32:26 zk-02 Keepalived[16766]: Stopping

Aug 04 15:32:31 zk-02 systemd[1]: keepalived.service never wrote its PID file. Failing. ## failed to write the PID file

Aug 04 15:32:31 zk-02 systemd[1]: Failed to start LVS and VRRP High Availability Monitor.

Aug 04 15:32:31 zk-02 systemd[1]: Unit keepalived.service entered failed state.

Aug 04 15:32:31 zk-02 systemd[1]: keepalived.service failed.

[root@zk-02 sbin]# vi /var/run/keepalived.pid

[root@zk-02 sbin]# vim /lib/systemd/system/keepalived.service

[root@zk-02 sbin]# vim /lib/systemd/system/keepalived.service

Look at keepalived.service

# vi /lib/systemd/system/keepalived.service

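Load Balancing: LVS + Keepalived

High-performance, highly available load balancing with LVS + Keepalived

Author: NetSeek Website: https://www.sodocs.net/doc/c210887482.html,

Background:

As your site's traffic grows, is the load on your servers getting heavier and heavier? You need a load-balancing solution. Commercial hardware such as F5 is too expensive, and as a start-up Internet company you want to keep costs down and avoid unnecessary spending while still getting the high performance and high availability of commercial hardware. Is there a good load-balancing solution that scales? Yes, there is. An LVS + Keepalived architecture built entirely on open-source software can give you load balancing together with highly available servers.

I. Introduction to LVS + Keepalived

1. LVS

LVS is short for Linux Virtual Server, a virtual server cluster system. The project was founded by Dr. 章文嵩 (Wensong Zhang) in May 1998 and is one of the earliest free software projects in China. It currently offers three IP load-balancing techniques (VS/NAT, VS/TUN and VS/DR) and eight scheduling algorithms (rr, wrr, lc, wlc, lblc, lblcr, dh, sh).

2. Keepalived

Here Keepalived is mainly used for health checking of the RealServers and for failover between the LoadBalance host and the Backup host.

II. Site load-balancing topology

IP list:

Name IP

LVS-DR-Master 61.164.122.6

LVS-DR-BACKUP 61.164.122.7

LVS-DR-VIP 61.164.122.8

WEB1-Realserver 61.164.122.9

WEB2-Realserver 61.164.122.10

GateWay 61.164.122.1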

Monitoring an Application Port with keepalived

keepalived supports three kinds of monitoring. The two most common are loss of network connectivity on the master and the keepalived service on the master going down; in both cases the backup takes over automatically.
The third kind monitors the state of an application service and can be implemented with vrrp_script, for example by monitoring PostgreSQL port 5432.

Master server configuration

vim /etc/keepalived/keepalived.conf

global_defs {
    router_id HA_1
}
vrrp_script chk_postgreSQL_port {
    # bash tries to open a TCP connection to the port; a failed connection fails the check
    script "</dev/tcp/127.0.0.1/5432"
    interval 1
    weight -30
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 1
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        192.168.8.254
    }
    track_script {
        chk_postgreSQL_port
    }
}

Backup server configuration

vim /etc/keepalived/keepalived.conf

global_defs {
    router_id HA_1
}
vrrp_script chk_postgreSQL_port {
    script "</dev/tcp/127.0.0.1/5432"
    interval 1
    weight -30
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 1
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        192.168.8.254
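The interplay between `priority` and a negative `weight` is easier to see with numbers. The sketch below is an illustration only: `effective_priority` is a hypothetical helper, and it models just the basic rule that a negative weight is subtracted while the check fails and a positive weight is added while it succeeds. It ignores the clamping keepalived applies to the final value.

```shell
#!/usr/bin/env bash

# effective_priority BASE WEIGHT CHECK_OK
#   BASE      configured VRRP priority
#   WEIGHT    weight of the tracked vrrp_script
#   CHECK_OK  1 if the script currently succeeds, 0 if it fails
effective_priority() {
    local base=$1 weight=$2 ok=$3
    if [ "$weight" -lt 0 ] && [ "$ok" -eq 0 ]; then
        # negative weight: lower the priority while the check fails
        echo $((base + weight))
    elif [ "$weight" -gt 0 ] && [ "$ok" -eq 1 ]; then
        # positive weight: raise the priority while the check succeeds
        echo $((base + weight))
    else
        echo "$base"
    fi
}
```

With the values used in the configurations above, `effective_priority 100 -30 0` gives 70 for a master whose port check fails, which is below the backup's 90, so the backup wins the election and takes the VIP.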

Implementing keepalived Functionality

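Keepalived

What keepalived does: it health-checks the RealServers and implements failover between the LoadBalance host and the Backup host.
RealServer: a web server in the server pool
LoadBalance: the load balancer
Backup: the standby load balancer

IP configuration

Test environment: two virtual machines running Red Hat 5, with keepalived installed from the source package.

Server IP addresses
keepalived master, real IP: 192.168.150.13
keepalived backup, real IP: 192.168.150.103
Floating (virtual) IP: 192.168.150.20

Configure the IP of the master keepalived server by editing ifcfg-eth0, the configuration file of the server's first network interface.
File location: vi /etc/sysconfig/network-scripts/ifcfg-eth0
Change BOOTPROTO to static to use a static address.
Add IPADDR. This is the IP address; the host part within the interface's subnet can be chosen freely.
Add the netmask. The gateway can be left out for now.
Note: LVS in NAT mode requires a gateway. (LVS is a virtual server that performs load balancing.)

Configure the backup keepalived server
File location: vi /etc/sysconfig/network-scripts/ifcfg-eth0
This is the eth0 configuration file on the backup keepalived server.

Installing keepalived

Download the source package keepalived-1.1.18.tar.gz and unpack it:
    tar -xvf keepalived-1.1.18.tar.gz
Run the configure step:
    ./configure
To use LVS, the items highlighted in red in the configure output must all be yes.
If an error occurs, add a declaration of the kernel version in use:
    [root@localhost keepalived-1.1.18]# vi /usr/src/kernels/2.6.18-194.el5-x86_64/include/linux/version.h
Add the line highlighted in red, then run ./configure again.
Then run make && make install (&& means that make install runs only after make has succeeded).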

keepalived Installation Guide

1. Install dependencies
    su - root
    yum -y install kernel-devel*
    yum -y install openssl-*
    yum -y install popt-devel
    yum -y install lrzsz
    yum -y install openssh-clients

2. Install keepalived
2.1. Upload
    1. cd /usr/local
    2. rz -y
    3. Select the keepalived installation file
2.2. Unpack
    tar -zxvf keepalived-1.2.2.tar.gz
2.3. Rename
    mv keepalived-1.2.2 keepalived
2.4. Install keepalived
    1. cd keepalived
    2. Run:
       ./configure --prefix=/usr/local/keepalived --enable-lvs-syncd --enable-lvs --with-kernel-dir=/lib/modules/2.6.32-431.el6.x86_64/build
    3. Compile: make
    4. Install: make install
2.5. Configure the service and enable it at boot
    cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/
    cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
    mkdir -p /etc/keepalived
    cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/
    ln -s /usr/local/keepalived/sbin/keepalived /sbin/
    chkconfig keepalived on
2.6. Edit the configuration file
    1. vi /etc/keepalived/keepalived.conf
    2.

Keepalived Study Notes (LVS + Keepalived)

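Keepalived study notes: LVS + Keepalived

Anyone learning LVS soon has to deal with the director (DR) being a single point of failure. Heartbeat can solve this well, but for LVS, Keepalived is the better fit: compared with Heartbeat it is simpler to configure and fails over faster. Keepalived only needs to be installed on the DR servers and configured as required.

Introduction to Keepalived

The summary on Baidu Baike is concise and clear:
keepalived is software that works in a way similar to layer 3, 4 & 5 switching, that is, what is usually called layer-3, layer-4, and layer-5 switching. Keepalived monitors the state of the web servers. If a web server crashes or malfunctions, Keepalived detects it and removes the faulty server from the system; once the server works again, Keepalived automatically adds it back to the server pool. All of this happens automatically, and the only manual work is repairing the faulty web server.

Layers 3, 4 & 5 correspond to the IP layer, the TCP layer, and the application layer of the TCP/IP stack. They work as follows:

Layer 3: In layer-3 mode, Keepalived periodically sends an ICMP packet (the same thing the ping program does) to each server in the pool. If a server's IP address does not respond, Keepalived reports the server as failed and removes it from the pool. A typical case is a server that was shut down improperly. Layer-3 checking uses the reachability of the server's IP address as the criterion for whether the server is healthy. This document uses this mode.

Layer 4: Once layer 3 is understood, layer 4 is straightforward. It uses the state of a TCP port to decide whether the server is healthy. A web server usually listens on port 80; if Keepalived finds that port 80 is not open, it removes the server from the pool.

Layer 5: Layer 5 works at the application layer. It is somewhat more complex than layers 3 and 4 and uses more network bandwidth. Keepalived checks whether the server program is running correctly according to the user's settings; if the result does not match those settings, Keepalived removes the server from the pool.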
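The layer-4 idea described above, "remove a server whose port does not answer", can be sketched in a few lines of shell. This is an illustration under stated assumptions, not how Keepalived itself is implemented: `check_tcp` and `filter_pool` are hypothetical names, and the check relies on bash's `/dev/tcp` redirection plus the coreutils `timeout` command.

```shell
#!/usr/bin/env bash

# check_tcp HOST PORT [TIMEOUT_SECONDS]
# Return 0 if a TCP connection to HOST:PORT can be opened, non-zero otherwise.
check_tcp() {
    local host=$1 port=$2 wait=${3:-2}
    # /dev/tcp/HOST/PORT is a bash redirection feature, not a real file,
    # so the connection attempt is run in an explicit bash process.
    timeout "$wait" bash -c "exec 3<>/dev/tcp/$host/$port" </dev/null 2>/dev/null
}

# filter_pool PORT
# Read one server address per line from stdin and print only the servers
# whose PORT accepts connections, i.e. the pool after failed servers are removed.
filter_pool() {
    local port=$1 server
    while read -r server; do
        if check_tcp "$server" "$port"; then
            echo "$server"
        fi
    done
}
```

For example, `printf '192.168.1.4\n192.168.1.5\n' | filter_pool 80` would print only the web servers that currently accept connections on port 80.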

Compiling, Installing, and Configuring keepalived to Start Automatically

Configuring Keepalived on CentOS for two-node hot-standby switchover

Category: Site Architecture, 2009-07-25 13:53

Keepalived

System environment:
************************************************************
Both servers run CentOS-5.2-x86_64.
Virtual IP: 192.168.30.20
Squid1 + Real Server 1: NIC address (eth0): 192.168.30.12
Squid2 + Real Server 2: NIC address (eth0): 192.168.30.13
************************************************************
Software list:
keepalived
https://www.sodocs.net/doc/c210887482.html,/software/keepalived-1.1.17.tar.gz
openssl-devel
yum -y install openssl-devel
***************************************************************
Configuration:
The setup uses keepalived for high availability, with LVS in DR mode.

1. Install and configure keepalived
1.1 Install the dependencies. On a minimal text-mode installation, install the following packages:
    # yum -y install ipvsadm
    # yum -y install kernel kernel-devel
    # reboot                          (reboot to switch to the new kernel)
    # yum -y install openssl-devel    (a dependency of keepalived)
    # ln -s /usr/src/kernels/`uname -r`-`uname -m`/ /usr/src/linux

Keepalived

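Contents
1.1 Introduction to Keepalived high-availability clusters
1.1.1 The keepalived service
1.1.2 The two main uses of keepalived: healthcheck & failover
1.1.2.1 LVS directors failover
1.1.3 How keepalived failover works
1.1.4 A brief introduction to the VRRP protocol
1.2 Deploying and installing the keepalived service
1.2.1 Downloading the keepalived package
1.2.2 Installing keepalived in practice
1.2.3 Configuring keepalived to start in the standard way
1.2.4 Configuring keepalived.conf
1.3 Configuring keepalived logging

1.1 Introduction to Keepalived high-availability clusters

1.1.1 The keepalived service
keepalived was originally designed for LVS, specifically to monitor the state of each service node in an LVS cluster. VRRP support was added later, so besides working with LVS it can also serve as high-availability software for other services (nginx, haproxy). VRRP stands for Virtual Router Redundancy Protocol. It was created to remove the single point of failure of static routing, and it keeps the network running stably without interruption. keepalived therefore provides both LVS cluster node healthchecks and LVS directors failover.

1.1.2 The two main uses of keepalived: healthcheck & failover

1.1.2.1 LVS directors failover
HA failover: failover and automatic switchover between the LB master host and the backup host.
This is the failover measure for a pair of load balancers (directors) working together. When the master load balancer (MASTER) fails, the backup load balancer (BACKUP) automatically takes over all of its work (the VIP resources and the related services). Once the MASTER has been repaired, it takes back the work it originally handled and the BACKUP releases what it took over while the MASTER was down, so both return to their original roles.

1.1.3 How keepalived failover works
Failover between a high-availability pair of keepalived directors is carried out through the VRRP protocol (Virtual Router Redundancy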

Automatic Master/Backup Switchover for MySQL Master-Slave Replication with keepalived

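Automatic master/backup switchover for MySQL master-slave replication with keepalived

MySQL + keepalived is a very good solution. In a MySQL HA environment the two MySQL servers replicate from each other, which keeps their data consistent. keepalived then provides a virtual IP, and its built-in service monitoring switches over automatically when MySQL fails.
In the test environment, two hosts form a MySQL master-slave replication setup. keepalived is installed on both, and a single virtual IP provides automatic master/backup switchover of the MySQL server.

Simulated environment:
VIP: 192.168.1.197 (virtual IP address)
Master: 192.168.1.198 (master database IP address)
Slave: 192.168.1.199 (slave database IP address)
Note: configuring MySQL master-slave replication is not covered in this document (prerequisite: replication is already set up).

Installation steps:
1. Install keepalived
    yum install -y keepalived
    chkconfig keepalived on
2. Configure keepalived.conf
Master: keepalived.conf
    vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            kenjin@https://www.sodocs.net/doc/c210887482.html,
        }
        notification_email_from kenjin@https://www.sodocs.net/doc/c210887482.html,
        smtp_connect_timeout 3
        smtp_server https://www.sodocs.net/doc/c210887482.html,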

Analysis of the VRRP Implementation in keepalived

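Analysis of the VRRP implementation in Keepalived
Author: Jupiter

1. Processing flow

Detailed description:
1. VRRP is started by the start_vrrp_child() function, which creates seven lists: unuse, event, ready, child, timer, write, and read.
2. start_vrrp_child() calls start_vrrp(), which actually starts VRRP. start_vrrp() reads the configuration file to obtain the configuration. It then takes a block from the unuse list through thread_add_event(), stores the first event in it with vrrp_dispatcher_init() as the callback, and appends the block to the event list. At that point start_vrrp() returns.
3. Next, launch_scheduler() is called. It calls thread_fetch() in a loop to monitor the seven lists and pick the callback that has to run.
   - thread_fetch() first checks the event list, takes an event from it, and runs its callback.
   - If the event list has nothing to process, it checks the ready list, takes an event, and runs its callback.
   - If the ready list has nothing to process, it checks the other four lists (timer, read, write, child) and moves their events to the ready list to wait for processing.
   - Once entries from event and ready have been processed, the blocks they used are returned to the unuse list.
4. As mentioned in step 2, start_vrrp() registers the first event with the callback vrrp_dispatcher_init(). After thread_fetch() picks it up, this callback runs first. It creates the receive and send sockets.
   - The receive socket handle is fd_in: a raw socket that, once created, joins the VRRP multicast group and is bound to the specified network device.
   - The send socket handle is fd_out: a raw socket that, once created, has IP_HDRINCL set (the outgoing packet data includes the IP header) and IP_MULTICAST_LOOP set (multicast), and is bound to the specified network device.
5. Then a callback is added to a list depending on the value of fd_in.
   - If fd_in == -1, thread_add_timer() adds a timed task to the timer list with the callback vrrp_read_dispatcher_thread().
   - If fd_in != -1, thread_add_read() adds a read task to the read list with the callback vrrp_read_dispatcher_thread().
6. thread_fetch() checks all the lists and eventually runs vrrp_read_dispatcher_thread(), which decides from the state of fd_in and the current thread->type whether to read a packet or to look up a VRRP instance in the locally stored data.
7. Once the VRRP instance has been found, VRRP_FSM_READ_TO(vrrp) or VRRP_FSM_READ(vrrp) is called to run the state machine, which acts according to the current state.
   - Initial state (Initialize)
     - If the local priority is 255, meaning this router is the IP address owner, it then:
       - sends a VRRP advertisement;
       - broadcasts gratuitous ARP requests that carry the mapping between the virtual MAC and the virtual IP, one request per virtual IP address;
       - starts an Adver_Timer timer with an initial value of Advertisement_Interval (1 second by default); when the timer expires, the next VRRP advertisement is sent;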
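The Initialize-state rule described above can be written down as a tiny decision function. This is a sketch, not keepalived code: `initial_transition` and `garp_count` are hypothetical names. The priority-255 branch follows the text above; the other branch (a router that does not own the address starts as BACKUP) is not in the excerpt and is taken from the VRRP state machine in RFC 3768.

```shell
#!/usr/bin/env bash

# initial_transition PRIORITY
# Print the state a VRRP router moves to when it leaves Initialize.
initial_transition() {
    local priority=$1
    if [ "$priority" -eq 255 ]; then
        # address owner: advertises at once and becomes MASTER
        echo "MASTER"
    else
        # assumption from RFC 3768: every other router starts as BACKUP
        echo "BACKUP"
    fi
}

# garp_count VIP...
# Print how many gratuitous ARP requests are sent: one per virtual IP.
garp_count() {
    echo "$#"
}
```

For instance, `initial_transition 255` prints MASTER, and `garp_count 10.1.1.200 10.1.1.201` prints 2 because each virtual IP gets its own gratuitous ARP request.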

keepalived Configuration File Explained

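keepalived configuration file explained
2010-12-27 13:40

global_defs {
    notification_email {                  # recipients of the e-mail keepalived sends on a switchover, one per line
        sysadmin@fire.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc   # sender address
    smtp_server localhost                 # SMTP server address
    smtp_connect_timeout 30               # SMTP connection timeout
    router_id LVS_DEVEL                   # an identifier for the machine running keepalived
}

vrrp_sync_group VG_1 {                    # groups instances that monitor multiple network segments
    group {
        inside_network                    # instance name
        outside_network
    }
    notify_master /path/xx.sh             # script run on transition to master
    notify_backup /path/xx.sh             # script run on transition to backup
    notify_fault "/path/xx.sh VG_1"       # script run on a fault
    notify /path/xx.sh
    smtp_alert                            # send e-mail notifications using the addresses and SMTP server from global_defs
}

vrrp_instance inside_network {
    state BACKUP                          # which node is master and which is backup; if nopreempt is set this value has no effect and the roles are decided by priority
    interface eth0                        # interface the instance is bound to
    dont_track_primary                    # ignore errors on the VRRP interface (not set by default)
    track_interface {                     # additional interfaces to monitor; a problem on any of them triggers a switchover
        eth0
        eth1
    }
    mcast_src_ip                          # source address for multicast packets; defaults to the primary IP of the bound interface
    garp_master_delay                     # after switching to master state, delay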
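The `notify` directive in the configuration above points at a script, and a sketch of what such a script might contain may be useful. The function name `describe_transition` and the message texts are hypothetical. The sketch assumes the argument order keepalived uses for a generic `notify` script: first "GROUP" or "INSTANCE", then the group or instance name, then the new state.

```shell
#!/usr/bin/env bash

# describe_transition TYPE NAME STATE
#   TYPE   "GROUP" or "INSTANCE"
#   NAME   name of the sync group or VRRP instance, e.g. VG_1
#   STATE  new state: MASTER, BACKUP, or FAULT
# Prints a one-line description; returns 1 for an unknown state.
describe_transition() {
    local type=$1 name=$2 state=$3
    case "$state" in
        MASTER) echo "$type $name: became MASTER, now holding the VIP" ;;
        BACKUP) echo "$type $name: became BACKUP, VIP released" ;;
        FAULT)  echo "$type $name: entered FAULT state" ;;
        *)      echo "$type $name: unknown state $state"; return 1 ;;
    esac
}
```

A script used as `notify /path/xx.sh` could end with a line such as `describe_transition "$1" "$2" "$3" | logger -t keepalived-notify` so that every transition lands in syslog.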

Complete Guide to Deploying LVS + Keepalived

Installation and configuration

Two load balancers:
Lvs1: 192.168.1.10
Lvs2: 192.168.1.11
Floating address (virtual IP, VIP):
Vip: 192.168.1.169
Real servers:
RS1: 192.168.1.102
RS2: 192.168.1.103

Configuring LVS and installing ipvsadm and keepalived

Perform these steps on both the LVS master and the LVS backup host.

1. First install the supporting packages:
    e2fsprogs-devel-1.41.12-18.el6.x86_64.rpm
    kernel-devel-2.6.32-642.el6.x86_64.rpm
    keyutils-libs-devel-1.4-4.el6.x86_64.rpm
    krb5-devel-1.10.3-10.el6_4.6.x86_64.rpm
    libcom_err-devel-1.41.12-18.el6.x86_64.rpm
    libnl-1.1.4-2.el6.x86_64.rpm
    libnl-devel-1.1.4-2.el6.x86_64.rpm
    libselinux-devel-2.0.94-5.3.el6_4.1.x86_64.rpm
    libsepol-devel-2.0.41-4.el6.x86_64.rpm
    zlib-devel-1.2.3-29.el6.x86_64.rpm
    openssl-devel-1.0.1e-15.el6.x86_64.rpm
    pkgconfig-0.23-9.1.el6.x86_64.rpm
    popt-devel-1.13-7.el6.x86_64.rpm
    popt-static-1.13-7.el6.i686.rpm
If a package turns out to be missing during installation, find it on the ISO or download it, then install it:
    rpm -ivh XXXXXXXX.rpm

2. Then install ipvsadm
Copy ipvsadm-1.26.tar.gz to /usr/local/src/lvs/ and run tar zxvf ipvsadm-1.26.tar.gz. Create the symbolic link ln -s /usr/src/kernels/2.6.32-431.el6.x86_64/ /usr/src/linux, change into the source directory with cd ipvsadm-1.26, and run make && make install.
    # find / -name ipvsadm        (locate the installed binary)
To check that ipvsadm was installed correctly, run it directly:
    # ipvsadm
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port Forward Weight ActiveConn InActConn
Check that the module has been loaded into the kernel:
    # lsmod | grep ip_vs
    ip_vs 78081 0

Failover with keepalived (Two-Node Hot Standby)

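Contents
Chapter 1: Installing keepalived
Chapter 2: Configuring the node hosts
Chapter 3: Virtual IP failover test
Chapter 4: Testing with ARP
Chapter 5: Testing with a web application

Chapter 1: Installing keepalived

1. On the cluster host 10.210.32.37, install the operating system, mount the disc image, and configure a local yum repository.
   Node 1 IP: 10.210.32.41
   Node 2 IP: 10.210.32.42
   Floating virtual address: 10.210.32.46
2. Download the keepalived package, version 1.4.1, from the keepalived website.
3. Upload keepalived to the /home/kp directory on both nodes.
    mkdir kp
    chmod 775 kp
4. Unpack the keepalived package.
5. Install keepalived, specifying the installation path (create the keepalived directory under /usr/local/ beforehand and set its permissions with chmod):
    ./configure --prefix=/usr/local/keepalived
6. Install the openssl package.
   The run ends with a message that the openssl package is not installed. The openssl-devel development package has to be installed.
7. Run the installation command from step 5 again.
   It reports that the ipvs package is not installed; the libnl and libnl-3 development packages also have to be installed.
   It reports a header error; the libnfnetlink devel package has to be installed.
   The yum repository does not contain the libnl package, so download it, place it in /home/kp, and install it from there.
8. Run the installation command from step 5 again.
   It reports a successful installation.
9. Change to the installation directory:
    # cd /usr/local/keepalived
   Check that the subdirectories are complete; there are normally four of them.
   keepalived is now installed. Repeat the same steps on 10.210.32.42.

Chapter 2: Configuring the node hosts

1. Configure the MASTER host (10.210.32.41)
   Copy the keepalived configuration to /etc, because keepalived reads its configuration from /etc at startup.
    # cd /usr/local/keepalived/etc/
    # cp -r keepalived /etc/        (copies the whole directory to /etc)
2. Configure keepalived.conf
   The /etc/keepalived/ directory contains only two entries, keepalived.conf and samples.
    # vi keepalived.conf

    ! Configuration File for keepalived
    global_defs {
    # The global_defs block configures e-mail notification. It can be commented out
    # for now, keeping only the opening and closing braces.
    # notification_email {
    #     acassen@firewall.loc
    #     failover@firewall.loc
    #     sysadmin@firewall.loc
    # }
    # notification_email_from Alexandre.Cassen@firewall.loc
    # smtp_server 192.168.200.1
    # smtp_connect_timeout 30
    # router_id LVS_DEVEL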

How keepalived Works

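How Keepalived works

keepalived has a modular design in which different modules are responsible for different functions. Its components are:
core, check, vrrp, libipfwc, libipvs-2.4, libipvs-2.6

core: the heart of keepalived, responsible for starting and maintaining the main process and for loading and parsing the global configuration file.
check: responsible for health checking (healthchecker); it covers the various health-check methods and parses the corresponding configuration, including the LVS configuration.
vrrp: the VRRPD child process, which implements the VRRP protocol.
libipfwc: the iptables (ipchains) library, used when configuring LVS.

Working principle:
Layers 3, 4 & 7 correspond to the IP layer, the TCP layer, and the application layer of the TCP/IP stack. They work as follows:

Layer 3: In layer-3 mode, Keepalived periodically sends an ICMP packet (the same thing the ping program does) to each server in the pool. If a server's IP address does not respond, Keepalived reports the server as failed and removes it from the pool. A typical case is a server that was shut down improperly. Layer-3 checking uses the reachability of the server's IP address as the criterion for whether the server is healthy.

Layer 4: Once layer 3 is understood, layer 4 is straightforward. It uses the state of a TCP port to decide whether the server is healthy. A web server usually listens on port 80; if Keepalived finds that port 80 is not open, it removes the server from the pool.

Layer 7: Layer 7 works at the application layer. It is somewhat more complex than layers 3 and 4 and uses more network bandwidth. Keepalived checks whether the server program is running correctly according to the user's settings; if the result does not match those settings, Keepalived removes the server from the pool.

Two-node hot standby; application clustering; distributed file systems; availability assurance for data transmission and reception; data caching and data queues; load balancing; integrity verification for data transmission and reception

Two-node hot standby:
Two-node hot standby topology: Active/Standby mode
Supported operating systems: Windows 2000/2003/2008, Linux
Supported databases: SQL, Oracle, Sybase, Notes, and others
Third-party applications can also be protected. In Active/Standby mode, when the primary host goes down the standby host starts the user's applications as quickly as possible.
Two-node hot standby topology: Active/Active mode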

Keepalived Two-Node Hot Standby (LVS + Keepalived High-Availability Cluster)

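Keepalived two-node hot standby
(LVS + Keepalived high-availability cluster)

I. Plan the network topology according to the lab requirements.
Note: Build the network topology according to the lab requirements; the detailed plan is shown in the figure.

II. Configure the master (and backup) servers for Keepalived hot standby.
Note: Install the required supporting packages, then compile and install Keepalived. The main configuration file is /etc/keepalived/keepalived.conf.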

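Note: Configure the master Keepalived server.
notification_email holds the mail settings used to send program-related messages to the administrator; it can be left unchanged here.
router_id is the name of this server.
vrrp_instance defines an instance.
state is the hot-standby role: MASTER for the master, BACKUP for the backup.
interface is the physical interface that carries the VIP address.
virtual_router_id is the ID of the virtual router; all servers in the same instance must use the same virtual_router_id.
priority is the priority; the node with the higher value wins the election (keepalived accepts values from 1 to 255).
advert_int is the advertisement interval in seconds.
authentication is the authentication method; all servers in the same instance must use the same settings.
virtual_ipaddress is the floating address (VIP).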
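Since virtual_router_id and authentication have to match on every server of an instance while state and priority must differ, a quick comparison of the two configuration files can catch mistakes early. The helpers below are a rough sketch with hypothetical names (`config_value`, `settings_match`); they only look at the first occurrence of a keyword, so they assume a file with a single vrrp_instance.

```shell
#!/usr/bin/env bash

# config_value KEY
# Print the value that follows the first occurrence of KEY in the
# keepalived.conf text read from stdin (single-instance files assumed).
config_value() {
    awk -v key="$1" '$1 == key { print $2; exit }'
}

# settings_match KEY FILE_A FILE_B
# Succeed only if KEY is present in both files with the same value.
settings_match() {
    local key=$1 a b
    a=$(config_value "$key" < "$2")
    b=$(config_value "$key" < "$3")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, `settings_match virtual_router_id master.conf backup.conf || echo "virtual_router_id differs"` flags a mismatch; the two file names here are placeholders for copies of each node's /etc/keepalived/keepalived.conf.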

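Note: For the backup (hot-standby) server, the settings to change are the server name, the hot-standby state, and the priority. (The eth5 interface is used here.)
Note: The VIP is visible on the master server.
Note: The VIP is not visible on the backup (hot-standby) server.

III. Set up the web servers.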

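Note: Set up two web servers with the IP addresses 192.168.1.4 and 192.168.1.5.

IV. Deploy the LVS + Keepalived high-availability cluster.
Note: Modify the kernel parameters; for cluster details, see "LVS Load-Balancing Cluster".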

Hands-On Guide to High-Performance, Highly Available Load Balancing with LVS + Keepalived

Hands-on guide to high-performance, highly available load balancing with LVS + Keepalived
kkmangnn
Website: https://www.sodocs.net/doc/c210887482.html,/way2rhce

Preface: This document uses keepalived to implement load balancing and high availability.

I. Site load-balancing topology

System environment: CentOS 5.2, with the gcc, openssl-devel, and kernel-devel packages installed.

II. Install the LVS and Keepalived packages

1. Download the packages
    # mkdir /usr/local/src/lvs
    # cd /usr/local/src/lvs
    # wget https://www.sodocs.net/doc/c210887482.html,/software/kernel-2.6/ipvsadm-1.24.tar.gz
    # wget https://www.sodocs.net/doc/c210887482.html,/software/keepalived-1.1.15.tar.gz

2. Install LVS and Keepalived
    # lsmod | grep ip_vs
    # uname -r
    2.6.18-92.el5
    # ln -s /usr/src/kernels/2.6.18-92.el5-i686/ /usr/src/linux
    # tar zxvf ipvsadm-1.24.tar.gz
    # cd ipvsadm-1.24
    # make && make install
    # tar zxvf keepalived-1.1.15.tar.gz
    # cd keepalived-1.1.15
    # ./configure && make && make install

Register keepalived as a system service so that it is easier to manage:
    # cp /usr/local/etc/rc.d/init.d/keepalived /etc/rc.d/init.d/
    # cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/
    # mkdir /etc/keepalived
    # cp /usr/local/etc/keepalived/keepalived.conf /etc/keepalived/
    # cp /usr/local/sbin/keepalived /usr/sbin/
    # service keepalived start|stop

III. Script for configuring the web servers

    # vi /usr/local/src/lvs/web.sh

    #!/bin/bash
    SNS_VIP=192.168.0.8
    case "$1" in
    start)
        # bind the VIP to the loopback alias and route it locally
        ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP
        /sbin/route add -host $SNS_VIP dev lo:0
        # keep the real server from answering or announcing ARP for the VIP
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        ;;
    stop)
        ifconfig lo:0 down
        route del $SNS_VIP >/dev/null 2>&1
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stopped"
