Liu CVPR 2013 - Semi-supervised Node Splitting for Random Forest Construction

Semi-supervised Node Splitting for Random Forest Construction

Xiao Liu?,Mingli Song?,Dacheng Tao?,Zicheng Liu?,Luming Zhang?,Chun Chen?and Jiajun Bu?College of Computer Science,Zhejiang University?

Centre for Quantum Computation and Intelligent Systems,University of Technology Sydney?

Microsoft Research,Redmond?

{ender liux,brooksong,zglumg,chenc,bjj}@https://www.sodocs.net/doc/1c18405958.html,?

dacheng.tao@https://www.sodocs.net/doc/1c18405958.html,?,zliu@https://www.sodocs.net/doc/1c18405958.html,?

Abstract

Node splitting is an important issue in Random Forest but robust splitting requires a large number of training sam-ples.Existing solutions fail to properly partition the fea-ture space if there are insuf?cient training data.In this pa-per,we present semi-supervised splitting to overcome this limitation by splitting nodes with the guidance of both la-beled and unlabeled data.In particular,we derive a non-parametric algorithm to obtain an accurate quality mea-sure of splitting by incorporating abundant unlabeled da-ta.To avoid the curse of dimensionality,we project the data points from the original high-dimensional feature s-pace onto a low-dimensional subspace before estimation.

A uni?ed optimization framework is proposed to select a coupled pair of subspace and separating hyperplane such that the smoothness of the subspace and the quality of the splitting are guaranteed simultaneously.The proposed al-gorithm is compared with state-of-the-art supervised and semi-supervised algorithms for typical computer vision ap-plications such as object categorization and image segmen-tation.Experimental results on publicly available datasets demonstrate the superiority of our method.

1.Introduction

Random Forest(RF)has been applied to various comput-er vision tasks including target tracking,object categoriza-tion and image segmentation.Though it is one of the state-of-the-art classi?ers,its promising performance depends heavily on the size of the labeled data.Because labeling training samples is very time consuming,only a small size of labeled training set is given in some tasks,which usually leads to an obvious performance drop.Thus,sometimes the insuf?ciency of labeled data is a severe challenging issue in the construction of RF.A popular solution to overcome this problem is to introduce abundant unlabeled data to guide the learning,which is known as semi-supervised learning (SSL).However,though many approaches have been given on SSL,few of them are applicable to RF.The only existing representative attempt is the Deterministic Annealing based Semi-Supervised Random Forests(DAS-RF)[14],which treated the unlabeled data as additional variables for margin maximization between different classes.Similar to many other margin maximization methods,?nding the exact so-lution of DAS-RF is NP-hard.Although an ef?cient deter-ministic annealing optimization is used to search an approx-imate solution,it cannot provide robust results ef?ciently. In spite of this,it has been pointed out[26]that the ef-fectiveness of margin maximization based methods for SSL depends heavily on speci?c data distribution which is usu-ally dif?cult to be satis?ed in many applications.Hence,it is desirable to?nd a method that allows RF to utilize the unlabeled data without losing its?exibility.

In this paper,by analyzing the construction of an RF using a small size of labeled training dataset,we?nd that the performance bottleneck is located in the node splitting. From this insight,we tackle the aforementioned problem by introducing abundant unlabeled data to guide the split-ting.Based on kernel density estimation and the law of total probability,we derive a nonparametric algorithm to utilize abundant unlabeled data to obtain an accurate quality mea-sure for node splitting.In particular,to avoid the curse of dimensionality,the data points are projected from the origi-nal high-dimensional feature space onto a low-dimensional subspace before estimating the categorical distributions.Fi-nally,a uni?ed optimization framework is proposed to se-lect a coupled pair of subspace and separating hyperplane for each node such that the smoothness of the subspace and the quality of the splitting are guaranteed simultaneously.

Our contribution is three-fold:

?We experimentally show that node splitting quality is the performance bottleneck for constructing RF with a small size labeled training set.

?We show that partitioning an arbitrary feature space

2013 IEEE Conference on Computer Vision and Pattern Recognition

with a hyperplane can be treated as projecting the data points from the original high-dimensional space onto the one-dimensional subspace that is perpendicular to the separating hyperplane.Thus a uni?ed optimiza-tion framework is presented to choose a coupled pair of subspace and hyperplane such that the subspace is smooth and the hyperplane can effectively partition the feature space.

?We present an ef?cient nonparametric estimation-based semi-supervised splitting method to construct R-F.

2.Related Work

Node splitting is the key issue of tree-based classi?ers. Payne et al.[16]tried to build optimal binary trees such that the least number of tests are required to approach the leaf nodes.However,their tree construction is based on recursive dynamic programming and the algorithm is fea-sible only for a small number of features.Hya?l et al.[12] showed that the optimal sense of minimizing the expect-ed number of tests required to classify an unknown sample is an NP-complete problem.Wu et al.[22]suggested a histogram-based splitting criterion for decision design.The histogram of training data is plotted on each feature axis.

A threshold is selected to partition the classes.A limita-tion of this method is that only few features(usually one) are considered at each stage such that the interaction be-tween features cannot be observed.Rounds[19]proposed Komogorov-Smirnov(K-S)distance and test as the splitting criterion.He suggested that the K-S distance between parts of the partition should be as large as possible.Breiman et al.[5]proposed to use the Gini index as the impurity mea-sure for internal nodes.The goodness of a split is de?ned by the decrease in impurity.Suen et al.[21]proposed an entropy-based splitting criterion for decision tree construc-tion.The entropy measure was later used to construct the well-known ID3decision tree[17].An improved version [18],namely C4.5tree,was proposed in which the normal-ized information gain is used as the criterion of splitting.

All the semi-supervised learning(SSL)methods rely on the smoothness assumption:if two points are close,the corresponding outputs should be similar[7,24].Howev-er,since the feature points are usually in high-dimensional space,one may have to face the curse of dimensionality to directly use the unlabeled data for estimation and thus there is insuf?cient number of observations to obtain a good estimation.Determined by how to address this problem, previous feasible discriminative SSL methods can be cat-egorized into two families.The?rst family relies on the low density separation assumption:the decision boundary should lie in a low-density region.A classi?cation margin for both unlabeled and labeled data is de?ned and maxi-mized through global optimization.Typical methods in this family are Transductive Support Vector Machine(TSVM) [13]and DAS-RF[14].The second family assumes that the high-dimensional data roughly lie on a low-dimensional manifold such that the unlabeled data can be ef?ciently used to infer the structure of the manifold without being troubled by the curse of dimensionality.A typical method in this family is LapSVM[2].Both of the above two families pre-dict the labels of unlabeled data as additional optimization variables,while the proposed method follows another line, i.e.,we project the data onto a low-dimensional subspace such that a small amount of data can give an accurate esti-mation.

3.Pre-analysis

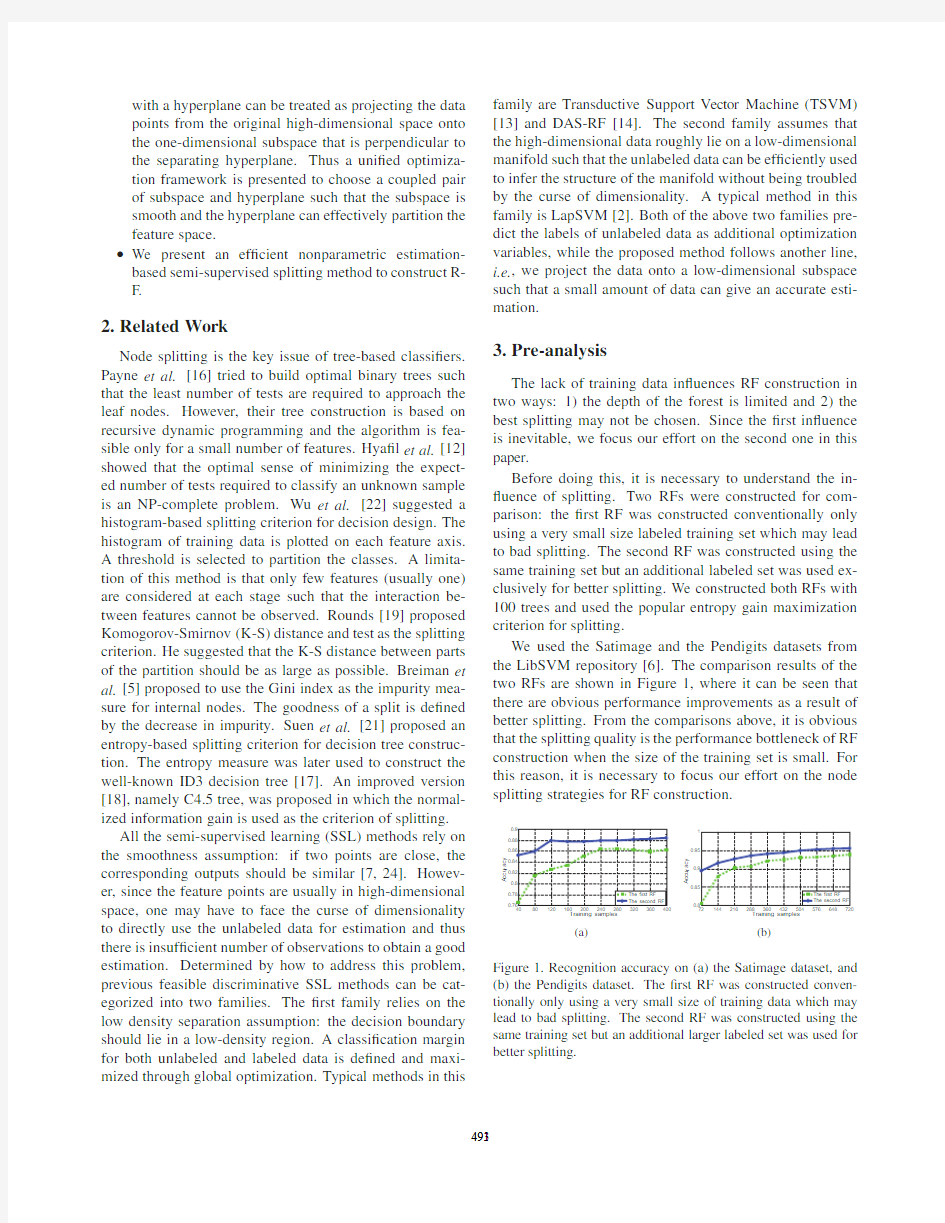

The lack of training data in?uences RF construction in two ways:1)the depth of the forest is limited and2)the best splitting may not be chosen.Since the?rst in?uence is inevitable,we focus our effort on the second one in this paper.

Before doing this,it is necessary to understand the in-?uence of splitting.Two RFs were constructed for com-parison:the?rst RF was constructed conventionally only using a very small size labeled training set which may lead to bad splitting.The second RF was constructed using the same training set but an additional labeled set was used ex-clusively for better splitting.We constructed both RFs with 100trees and used the popular entropy gain maximization criterion for splitting.

We used the Satimage and the Pendigits datasets from the LibSVM repository[6].The comparison results of the two RFs are shown in Figure1,where it can be seen that there are obvious performance improvements as a result of better splitting.From the comparisons above,it is obvious that the splitting quality is the performance bottleneck of RF construction when the size of the training set is small.For this reason,it is necessary to focus our effort on the node splitting strategies for RF construction.

4080120160200240280320360400

0.76

0.78

0.8

0.82

0.84

0.86

0.88

0.9

Training samples

A

c

c

u

r

a

c

y

The first RF

The second RF

(a)

72144216288360432504576648720

0.8

0.85

0.9

0.95

1

Training samples

A

c

c

u

r

a

c

y

The first RF

The second RF

(b)

Figure1.Recognition accuracy on(a)the Satimage dataset,and (b)the Pendigits dataset.The?rst RF was constructed conven-tionally only using a very small size of training data which may lead to bad splitting.The second RF was constructed using the same training set but an additional larger labeled set was used for better splitting.

4.Semi-supervised Node Splitting

RF consists of multiple decision trees:F= {t1,t2,???,t N}and each is independently trained and test-ed.

In the RF construction stage,the algorithm learns a clas-si?cation function?:X→Y using the training sam-ples{x i∈X}i=1???l and the corresponding labels{y i∈Y}i=1???l,where X??M is the feature space and Y= {1???K}is the label set.The trees are usually grown to the greatest possible extent without pruning.Each internal n-ode of RF is binary split with a partition criterion.Each leaf node of RF is a voter which votes for the class into which the most samples fall.

During testing,given a test case x,RF gives the proba-bility estimation for each class as follows

p(k∣x)=1

N

N

∑

i=1

p i(k∣x),(1)

where p i(k∣x)is the probability estimation of class k given by the i t?tree.It is estimated by calculating the ratio that class k gets votes from the leaves in the i t?tree

p i(k∣x)=

l i,k

∑K

j=1

l i,j

,(2)

where l i,k is the number of leaves in the i t?tree that vote for class k.The overall decision function of RF is de?ned as

?=argmax

k∈Y

p(k∣x).(3) 4.1.Semi-supervised Splitting

Considering both accuracy and time cost,oblique linear split is the most popular split strategy.An oblique linear split is expressed as a function of the hyperplane

W?x=θ,(4) where W∈?M andθ∈?are the parameters.When there is only one non-zero element in W,the split strategy focus-es on a single attribute in one node[4,10].Otherwise,the combination of multiple attributes is considered[14,11].

Given the data falling into a node and a candidate hy-perplane,a quality measure needs to be de?ned such that one can search for the best hyperplane to maximize the s-plitting quality.There are four common criteria to evaluate the splitting quality,i.e.,information gain[17],normalized information gain[18],Gini index[5],and Bayesian classi?-cation error[9].As the discussion given in Appendix A,the key procedure of all the four criteria is the estimation of p k, which re?ects the categorical distribution of the k t?class.

Traditionally,we can use the law of total probability to calculate p k

p k=

∫

R

p(k∣x)dp(x).(5) Since both p(x)and p(k∣x)are unknown,?nite labeled samples are used to make the estimation

p k=

1

∑∣R∣

i=1

w R

i

∣R∣

∑

i=1

p(k∣x R

i

)w R

i

,(6)

where w R

i

is the i t?sample falling into a node R and w R

i is its weight.Since all the samples are labeled,p(k∣x R

i

)is either1or0

p(k∣x R

i

)=[y R

i

=k].(7) The problem with the fully supervised splitting is that,

although the distribution p(k∣x R

i

)is given by the labeled sample,the sparse labeled data cannot give a good approx-imation of the marginal distribution which may lead to a worse choice of the separating hyperplane.If only given few labeled samples,for example,one would choose to par-tition the two-dimensional space with the hyperplane shown in Figure2(a).However,its estimation of the probability distribution is not good.In contrast,given more data,a bet-ter splitting can be found,as shown in Figure2(b).

(a)(b)

(c)(d)

Figure2.The red triangles and the blue circles are labeled samples of two classes while the black squares are unlabeled data.If only given a small number of labeled data,one may partition the fea-ture space with the hyperplane shown in(a).When given more la-beled data,one could?nd a better splitting strategy as in(b).Even the abundant data are unlabeled one can still choose the appropri-ate separating hyperplane as in(c)by combining the law of total probability and the kernel-based density estimation.As shown in (d),our method goes a bit further.We carry out the kernel-based density estimation in a one-dimensional subspace of the original feature space.Through this means,the curse of dimensionality can be avoided.

Unfortunately,the insuf?ciency of labeled training da-ta usually leads to a sparse distribution and a bad approxi-mation like Figure2(a).Our solution to overcome the this

limitation is to introduce abundant unlabeled samples to es-timate p k .The law of total probability is still used to calcu-late the probability distribution p k over different classes

?p k =1

∑∣R ∣

i =1w R i

∣R ∣

∑i =1

?p (k ∣x R i )w R i .(8)

Since we now have many more data points,a much bet-ter approximation for the marginal distribution of p (x )in

R can be obtained.A new problem to arise is that the pos-teriori distribution ?p (k ∣x R i )of unlabeled data is unknown.Since there are no priors of the categories,we can estimate ?p (k ∣x R i )as the probability density ratio

?p (k ∣x R i )=p (x R i ∣k )

∑K

j =1p (x R i ∣j ).(9)For p (x R i ∣k ),we apply a kernel-based density estima-tion with Gaussian kernel [20]

K ?(u )=??d (2π)?d/2exp {

?1

2?

2u T u }

,

(10)

where ?is the bandwidth to be determined and d is the data dimension.We then have the following estimation for an unlabeled sample

p (x R i ∣k )=

1n k

∑

y j =k

K ?(x R i ?x j ),(11)where n k is the number of samples that are labeled k .

4.2.Kernel Based Density Estimation on Low-dimensional Subspace

In this part,we derive a convenient formula to select the bandwidth ?.The asymptotic mean squared error (AMISE)criterion [20]is applied to select the optimal bandwidth AMISE (H )=1

4μ22

(K )∫

[tr {H T H p (u )H }]2du (12)

+

∣∣K ∣∣22

n det(H )

,where H is the bandwidth matrix,n is the sample size,H p (u )is the Hessian of the density p (u )to be estimated and μ22(K )is the squared variance of the kernel.In our case,K is the Gaussian kernel and the bandwidth matrix is H =?I d .Since the true density p (x ∣k )is unknown,we adopt the commonly used rule-of-thumb [20]to replace the unknown true density by a reference density q (x ),e.g.,a Gaussian distribution with its covariance matrix equal to the sample covariance.To simplify the calculation,we conduct whitening preprocessing before applying the estimation so that the sample covariance is the identity matrix,and thus q (x )=N (0,I ).Based on the above discussions,AMISE for the conditional density p (x ∣y =k )can be simpli?ed as

AMISE (?)=2d +d 2

2d

+4πd/2+12d πd/2n k ?d

.(13)

Taking the derivative with respect to ?and setting it as 0,we obtain the optimal bandwidth

?opt =(

4

(d +2)n k )1/(4+d ).(14)

Figure 2(c)shows a case where,by combining the law of total probability and the kernel-based density estimation,the appropriate separating hyperplane with abundant unla-beled data can be chosen.

Since the feature points are usually in very high-dimensional space,directly estimating the densities is still not a good idea,not only because of the intractable time complexity but also because there are insuf?cient labeled samples to make a good estimation in the high-dimensional space.

Our solution to this problem is to estimate the densities in a low-dimensional subspace rather than in the original high-dimensional feature space.Note that another way of looking at the hyperplane partition is that the original da-ta are projected onto the one-dimensional subspace that is perpendicular to the separating hyperplane.In particular,by using a hyperplane W ?x =θto partition the feature space,the algorithm makes a projection with the projection function

z =W ?x.

(15)

Furthermore,if the data can be well separated by the hyperplane,it is reasonable to believe that the metric in the subspace will re?ect the intrinsic distance between data points.Thus,the labels of both labeled and unlabeled data distribute smoothly along the subspace.We can therefore estimate the density p (k ∣z )instead of p (k ∣x ).In this case,the Gaussian kernel becomes

K ?(u )=1

?√2πexp {?12?2u 2}

,

(16)and the optimal choice of the bandwidth is ?opt =(4

3n k

)1/5.

(17)

We want to search for a coupled pair of subspace and separating hyperplane such that the smoothness of the sub-space and the quality of the splitting are guaranteed simul-taneously.An alternative optimization strategy is adopted to couple the two procedures by iterating the following two updating steps:

?Project the data onto the given subspace and estimate the categorical distribution.

?Search for a separating hyperplane according to the quality measure.Set the projection subspace as the perpendicular direction to the selected hyperplane.

Notice that we do not have to search for the optimal sep-arating hyperplane,but only need to choose the best one from a candidate sets.We list the details of the proposed method in Algorithm1.

Algorithm1Semi-supervised Splitting

Input:Labeled training data X l that fall into R and the cor-responding labels Y l.

Input:Unlabeled training data X u that fall into R. Output:The parameters of the chosen hyperplane W R and θR.

1:Randomly generate the set of parametersΩfor candi-date hyperplanes.

2:Search for a hyperplane with parameters W0andθ0 that maximize the quality measure considering only la-beled data.

3:Set the iteration number t=0.

4:repeat

5:Set t=t+1.

6:Project all the samples onto the subspace that is per-pendicular to the separating hyperplane:z=W t?x. 7:for each labeled samples x i∈X l do

8:Use the given label as posterior distribution p(k∣z i)=[y i=k].

9:end for

10:for each unlabeled samples x i∈X u do

11:Calculate p(k∣z i)with the kernel-based density es-timation.

12:end for

13:Measure the split quality of each hyperplane.

14:Choose the hyperplane parameters W t andθt that maximize the quality measure.

15:until the chosen separating hyperplane is stable or the algorithm reaches enough iterations.

16:Set W R=W t andθR=θt.

17:Return W R andθR.

5.Random Forest Construction Based on

Semi-supervised Splitting

In the RF construction stage,an individual training set for each tree is generated from the original training set us-ing bootstrap aggregation.The samples which are not cho-sen for training are called Out-Of-Bag(OOB)samples of the tree and can be used for calculating the Out-Of-Bag-Error(OOBE),which is an unbiased estimation of the gen-eralization error.It has been shown that the OOBE is an unbiased estimation of the generalization error[3].Leistner et al.[14]?rst proposed the‘airbag’algorithm to use the OOBE to measure the performance of RF to detect whether the variation to the original algorithm would harm the sys-tem rather than assist.They decided whether to discard or retain a new forest by comparing its OOBE with the previ-ous forest.A shortcoming of the‘airbag’approach is that it regards the RF as a whole,so some trees with high perfor-mance may be dragged down by bad trees.

To overcome the limitation of‘airbag’algorithm,we propose to independently compare the OOBE of single de-cision trees in the supervised and semi-supervised models. In each model,we use a set of labeled bootstrap data to con-struct the supervised decision tree and use the same set of la-beled data and a set of unlabeled bootstrap data to construct the semi-supervised tree.The tree with smaller OOBE will be retained.The overall learning and control procedures of the proposed algorithm are shown in Algorithm2. Algorithm2Semi-supervised Splitting

Input:Labeled training data X l and the corresponding la-bels Y l.

Input:Unlabeled training data X u.

Input:The size of the forest N.

Output:The learned RF F.

1:Initialize an empty forest F.

2:for the i t?decision tree in F do

3:Generate a new labeled set X i l and a new unlabeled set X i u using the bootstrap aggregation.

4:Train the tree with only labeled samples:t i l= trainT ree(X i l).

5:Compute the OOBE:e i l=oobe(t i l,X l?X i l).

6:Train the tree with both labeled and unlabeled sam-ples:t i u=semiT ree(X i l,X i u).

7:Compute the OOBE:e i u=oobe(t i u,X l?X i l). 8:if e i l>e i u then

9:F=F∪t i u.

10:else

11:F=F∪t i l.

12:end if

13:end for

14:Return F.

6.Experiment and Analysis

We compared the proposed semi-supervised splitting with different splitting criteria on typical machine learning tasks.We show that by introducing abundant unlabeled da-ta,obvious accuracy improvement can be achieved.We also applied the proposed semi-supervised splitting RF for ob-ject categorization and image segmentation.Our method achieves state-of-the-art categorization performance on the Caltech-101dataset[15]and segmentation performance on the MSRC dataset[8].

6.1.Data Classi?cation

To quantitatively evaluate the improvement over the tra-ditional splitting criteria,we test our method on the Satim-age and Pendigits datasets.The Satimage dataset has 4435training samples and 2000testing samples while the Pendigits dataset has 7494training samples and 3498test-ing samples.We implement Breiman’s Random Forests [4]with the four different splitting criteria,i.e.,information gain,normalized information gain,Gini index and Bayesian classi?cation error.The traditional versions of these criteria are used as baselines.The proposed semi-supervised split-ting was applied to the four criteria.For each dataset,we randomly chose a part of the training data as the labeled da-ta and left the remainder as unlabeled data.The ratios of the chosen data range from 0.01to 0.1.The weights of the labeled samples were set at 1.0while the weights of the un-labeled samples were set through cross-validation.We built the RF with 100trees and 10hyperplanes were random-ly generated as candidates in the internal node of RF.To avoid computation cost in testing,only two attributes were considered in each hyperplane,and the RF was constructed without pruning.The training-testing procedures were re-peated 10times.We show the comparison results in Figure 3and it is clear that substantial performance improvement is achieved as a result of using our method.

40

80120

160200240280320360400

0.76

0.780.80.820.840.860.880.9Training Samples

A c c u r a c y Information gain

Normalized information gain Gini index

Bayesian classification error

Semiísupervised information gain

Semiísupervised normalized information gain Semiísupervised Gini index

Semiísupervised Bayesian classification error (a)

72

144

216

288

360432504576

648

720

0.76

0.780.80.820.840.860.880.90.920.940.96Training Samples

A c c u r a c y Information gain

Normalized information gain Gini index

Bayesian classification error

Semiísupervised information gain

Semiísupervised normalized information gain Semiísupervised Gini index

Semiísupervised Bayesian classification error (b)

Figure 3.The classi?cation accuracy of Random Forests with tra-ditional splitting criteria (dashed lines)and the proposed semi-supervised splitting (solid lines).The results on the Satimage dataset and the Pendigits dataset are shown in (a)and (b)respec-tively.

We also compared the proposed semi-supervised split-ting RF with the state-of-the-art semi-supervised and super-vised classi?ers:RF [4],TSVM [13],SVM [6]and DAS-RF [14].The comparisons are shown in Figure 4.It can

be seen that the proposed method performs the best among them.

40

80

120

160

200240280320

360

400

0.76

0.780.80.820.840.860.880.9Training Samples

A c c u r a c y SVM TSVM DASíRF RF Ours

(a)

72

144

216

288

360432504576

648

720

0.80.820.840.860.880.90.920.940.96Training Samples

A c c u r a c y SVM TSVM DASíRF RF Ours (b)

Figure 4.The classi?cation accuracy of the proposed method with state-of-the-art classi?ers.The results on the Satimage dataset and the Pendigits dataset are shown in (a)and (b)respectively.

6.2.Object Categorization

We used the Caltech-101dataset for the object cate-gorization experiment.The Caltech-101dataset is com-posed of 101categories.Each class contains 31to 800im-ages;most of the images are medium resolution,i.e .about 300×300pixels.Following the common experiment setup,we randomly chose 15images per category as the labeled training data and 15images as the unlabeled training data.The remaining images were used for testing.We followed the ScSPM approach [23]in which each image was repre-sented as a 21504-dimension feature vector.PCA was then applied to the resulting vector.Maintaining 99.5%ener-gy,we obtained a 4000-dimensional feature vector for each image.We constructed a RF with 100trees and used 100candidate hyperplanes in the internal node.The weights of the unlabeled data were set at 0.5while the weights of the labeled data were set at 1.0.Normalized information gain is used as the splitting criterion to construct the RF.The proposed method and the traditional RF achieved 69.3%and 64.4%categorization accuracy respectively while the accuracy of SVM was 65.8%.Using the same features,our method brought an improvement of 4.9percent over the tra-ditional RF and 3.5percent over the SVM,by introducing abundant unlabeled data.

6.3.Image Segmentation

We applied the proposed semi-supervised splitting RF for the task of image segmentation in the 9-class MSRC

dataset.The9classes are:building,grass,tree,cow,sky, aeroplane,face,car and bicycle.We randomly chose150 images as the labeled training data and150images as the unlabeled training data from a total of480images,leaving the remainder as the testing data.We used the SLIC[1]to over-segment each image into200superpixels.The label of a superpixel was assigned as the majority of the pixel-level ground truth.We used the9-dimensional color momen-t,48-bins histogram of RGB,9-bins histogram of gradient [25]and59-bins histogram of LBP as the features.We con-structed a RF with100trees,each having a maximum depth of15.During splitting,10hyperplanes were randomly gen-erated as candidates and the hyperplane that maximized the information gain was chosen.We set the weight of a la-beled superpixel at1.0and set the weight of an unlabeled superpixel at0.3through cross-validation.Similar to most popular segmentation approaches,we applied a CRF stage after having learnt the class posteriors of the superpixels. The class posteriors from RF were used as unary potentials and pairwise potentials were de?ned over adjacent super-pixels.The parameters of the CRF model were trained us-ing the same150labeled training data used to construct the RF.When testing,the alpha-expansion graph-cut algorithm was used to infer the CRF model.We show the segmenta-tion results of the proposed semi-supervised splitting RF in Figure5.The original images and the ground truths are also shown.The segmentation accuracy of the proposed method and traditional RF is87.5%and83.6%respectively.As can be seen,our method is more accurate than the alternative method.

7.Conclusion and Future Work

In this paper,we introduced a semi-supervised splitting method that uses abundant unlabeled data to guide the node splitting of random forests.We derived a nonparametric al-gorithm to estimate the categorical distributions of the inter-nal nodes such that an accurate quality measure of splitting can be obtained.Our method can be combined with many popular splitting criteria,and the experimental results show that it brings obvious performance improvements to all of them.

In the future,we would like to investigate the problem of constructing RFs without labeled data.A uni?ed splitting framework that can handle both labeled and unlabeled data would be the extension. ACKNOWLEDGEMENTS

This work was supported in part by the Nation-al Natural Science Foundation of China(61170142), by the National Key Technology R&D Program under Grant(2011BAG05B04),by the Program of Internation-al S&T Cooperation(2013DFG12841),and by the Fun-damental Research Funds for the Central Universities (2013FZA5012).M.Song is the corresponding author. Appendix A

We consider four usual choices:information gain,nor-malized information gain,Gini index and Bayesian classi?-cation error.

Information Gain:

The information gain is de?ned as the subtraction of en-tropies before and after splitting

△H(R,W,θ)=H(R)?

∣R l∣

∣R∣

H(R l)?

∣R r∣

∣R∣

H(R r),(18)

where R is an internal node,R l and R r are its left and right child respectively.H(R)is the Shannon entropy of R

H(R)=?

K

∑

k=1

p k log p k,(19)

where p k is the probability distribution of the k t?classes in R.

Normalized Information Gain:

The normalized entropy gain is de?ned as the quotient of the information gain and a normalized factor

△N(R,W,θ)=

△H(R,W,θ)

?

(

∣R l∣

∣R∣

log∣R l∣∣R∣+∣R r∣∣R∣log∣R r∣∣R∣

).(20)

Gini Index:

The Gini index is a measure of the impurity of a node and its de?nition is

G(R)=

K

∑

k=1

p k(1?p k).(21)

When used for qualifying splitting,the following func-tion should be maximized

△G(R,W,θ)=G(R)?

∣R l∣

∣R∣

G(R l)?

∣R r∣

∣R∣

G(R r).(22)

Bayesian Classi?cation Error:

The Bayesian classi?cation error of a node is de?ned as

C(R)=1.0?argmax

k∈1???K

p k.(23)

When used for qualifying splitting,the following func-tion needs to be maximized

△C(R,W,θ)=C(R)?

∣R l∣

∣R∣

C(R l)?

∣R r∣

∣R∣

C(R r).(24)

From the above discussion,it is noticeable that all the four criteria heavily depends on the estimation of the prob-ability distribution p k.

Figure5.The original images,ground truths,the proposed segmentation results and traditional RF-based segmentation results on the MSRF dataset are shown from the top to the bottom row.The black area in the ground truth images is not labeled.

References

[1]R.Achanta, A.Shaji,K.Smith, A.Luchi,P.Fua,and

S.S¨u sstrunk.Slic superpixels compared to state-of-the-art

superpixel methods.IEEE Trans.Pattern Anal.Mach.Intel-

l.,34:2274–2282,2012.

[2]M.Belkin,P.Niyogi,and V.Sindhwani.Manifold regular-

ization:A geometric framework for learning from labeled

and unlabeled examples.JMLR,7:2399–2434,Dec.2006.

[3]L.Breiman.Out-of-bag estimates.Technical report,1966.

[4]L.Breiman.Random forests.Machine Learning,45:5–32,

2001.

[5]L.Breiman,J.Friedman,R.Olshen,and C.Stone.Classi?-

cation and regression trees.CA:Wadsworth Int.,Belmont,

1984.

[6] C.-C.Chang and C.-J.Lin.Libsvm:a library for support

vector machines.ACM Trans.Intell.Syst.Tech.,2,2011.

[7]O.Chapelle,B.Sch¨o lkopf,and A.Zien.Semi-supervised

Learning.The MIT Press,Cambridge,Massachusetts,

Lodon,England,2006.

[8] A.Criminisi.Microsoft research cambridge object recogni-

tion image dataset.version1.0,2004.

[9]R.Duda and P.Hart.Pattern Classi?cation and scene anal-

ysis.Wiley-Interscience,1973.

[10]P.Geurts,D.Ernst,and L.Wehenkel.Extremely randomized

trees.Machine Learning,63:3–42,2006.

[11]T.Ho.The random subspace method for constructing de-

cision forests.IEEE Trans.Pattern Anal.Mach.Intell.,

20:832–844,Aug.1998.

[12]L.Hya?l and R.Rivest.Constructing optimal binary deci-

sion trees is https://www.sodocs.net/doc/1c18405958.html,rmation Processing Letters,

5:11–17,1976.

[13]T.Joachims.Transductive inference for text classi?cation

using support vector machines.Proc.ICML,pages200–209,

1999.

[14] C.Leistner,A.Saffari,J.Santner,and H.Bischof.Semi-

supervised random forests.Proc.ICCV,pages506–513,

Sept.2009.

[15] F.-F.Li,R.Fergus,and P.Perona.Learning generative visual

models from few training examples:an incremental bayesian

approach tested on101objct categories.Proc.CVPR Work-

shop on Generative-Model Based Vision,2004.

[16]H.Payne and W.Meisel.An algorithm for constructing opti-

mal binary decision trees.IEEE https://www.sodocs.net/doc/1c18405958.html,put.,C-26:905–

516,Sept.1977.

[17]J.Quinlan.Induction of decision trees.Mach.Learn.,1:81–

106,Mar.1986.

[18]J.Quinlan.C4.5:Programs for machine learning.Morgan

Kaufmann Publishers,1993.

[19] E.Rounds.A combined non-parametric approach to feature

selection and binary decision tree design.Pattern Recogni-

tion,12:313–317,1980.

[20] B.Silverman.Density Estimation for Statistics and Data

Analysis.Chapman and Hall/CRC,Apr.1986.

[21] C.Suen and Q.Wang.ISOETRP-an interactive clustering

algorithm with new objectives.Pattern Recognition,17:211–

219,1984.

[22] C.Wu,https://www.sodocs.net/doc/1c18405958.html,ndgrebe,and P.Swain.The decision tree ap-

proach to classi?cation.School Elec.Eng.,Purdue Univ.,

Lafayette,IN,Rep.RE-EE75-17,1975.

[23]J.Yang,K.Yu,Y.Gong,and T.Huang.Linear spatial pyra-

mid matching using sparse coding for image classi?cation.

Proc.CVPR,pages1794–1801,2009.

[24]Y.Yang,F.Nie,D.Xu,J.Luo,Y.Zhuang,and Y.Pan.A

multimedia retrieval framework based on semi-supervised

ranking and relevance feedback.IEEE Trans.Pattern Anal.

Mach.Intell.,34:723–742,2012.

[25]L.Zhang,M.Song,Z.Liu,X.Liu,J.Bu,and C.Chen.

Probabilistic graphlet cut:Exploiting spatial structure cue

for weakly supervised image segmentation.Proc.CVPR,

2013.

[26]T.Zhang and F.Oles.A probability analysis on the value

of unlabeled data for classi?cation problems.Proc.ICML,

pages1191–1198,2000.

混凝土规范相关问题专家答疑整理版

混凝土规范相关问题专家答疑整理版 问题①:混凝土规范4.2.3条:横向钢筋用作受剪、受扭、受冲切承载力计算时,其数值大于360N/mm2时应取360N/mm2。 可是我在看朱总2013年真题解析时,箍筋HRB500,可是计算时取fyv=435N/mm2 是我规范理解错了吗?往曾工指点迷津 采纳回答:(曾晓峰) 1.[规范]就是[规定],如其它无更专业的柤关规定与之矛盾,和规范自身无明显错误外,都应以它(规范)为准. 2.例:[假如]混凝土规范4.2.3条:"横向钢筋用作受剪、受扭、受冲切承载力计算时,其数值大于[300N/mm2时应取300N/mm2。]在规范未修改时,谁能说它错?且都应无条件执行,且该条还是[强条]. ②结构的层间刚度比,抗剪承载力需要满足抗震规范的不?(未解决,等待高手在线解答) ③抗规5.2.5条文说明: “扭转效应明显与否一般可由考虑耦联的振型分解反应谱法分析结果判断,例如前三个振型中,二个水平方向的振型参与系数为同一个量级,即存在明显的扭转效应。” 这句话应该怎样理解呢?(未解决,等待高手在线解答) ④高规“大底盘多塔结构”的疑问 2.1.15 未通过结构缝分开的裙房上部具有两个或者两个以上塔楼的结构。

条文说明:一般情况下,在地下室连为整体的多塔楼结构可不作为本规程10.6节规定的复杂结构。 10.6.3.4 大底盘多塔结构,可按本规程第5.1.14条规定的整体和分塔计算模型分别验算整体结构和各塔楼结构扭转为主的第一周期与平动为主的第一周期的比值,并应符合本规程第3.4.5条的有关要求。 请问 1:对于仅三面有土的地下室,上部两个塔楼用结构缝分开,地下室不分缝,这个是复杂结构,需要满足10.6.3.4对吧? 2:对于1的多塔楼结构需要整体建模和分塔建模分别计算,周期比和位移比都满足要求,那其他参数呢?是哪个模型满足还是都满足?配筋取包络吗? 3:对于1的的多塔结构,整体建模就是把整个模型按实际建吧,那分塔建模呢?是指分几个模型建还是在整体模型中定义多塔? 采纳解答:(曾晓峰) 1.对于仅三面有土的地下室,上部两个塔楼用结构缝分开,地下室不分缝,应属于大底盘多塔结构。因当地震水平力从无土的对方作用而来时,在该方向等于无地下室,该地下室相当于通常情况下的裙房。 2. A.多塔楼结构需要整体建模和分塔建模分别计算和相关要求可参《全国民用建筑措施》(混凝土) 12.5.3较为详细。

公路工程造价专家答疑(一)

公路工程造价专家答疑(一) 1、问:预算2-3-4-4、2-3-5-1、2-3-6-2中,如果只计算铺砌或安砌工作,没有预制工程时(预制块外购成品)如何调整定额? 答:取消砼和搅拌机数量.2-3-4-4:人工调整为18.87,其他材料费减去20.13元;2-3-5-1:人工调整为11.02,其他材料费减去27.93元;2-3-6-2:人工调整为11.55,其他材料费减去26.32元. 2、问:在清单细目单价中已按定额取费系数计取了临时设施费和企业管理费,在清单第100章中还可以再计取承包人驻地建设费用吗?这两部分费用是否有重复的地方? 答:临时设施费已包括承包人驻地建设.如在清单100章中计取该项费用,应核减临时设施费费率.3、问:在工程量清单中有一项“减速带拆除及恢复”,其中恢复一项我套用的是6-1-9路面标线的项,但是在定额中找不到拆除一项的依据,请问减速带的拆除应该套用预算定额中的哪个项?答:定额未编制减速带,套用标线也不合理.建议参考其他相关定额或询价. 4、问:07新定额中,并没有SNS柔性防护的部分,而在边坡防护中,应用已越来越多,预算如何套用?目前在做的一个主动型单层柔性防护网项目(TECCO格栅网),比较工艺材料相似性,是否可做 如下处理:锚杆埋设和挂网套用定额5-1-8,另单独增加安装锚锭板项目? 答:可按类似项目套用. 5、问:当定额消耗量与实际消耗量不同时,做预算单价时,能否将定额消耗的人、材、机消耗调整为实际的人、材、机消耗? 答:概算和预算定额是控制工程投资的依据,各定额消耗量与实际发生情况会有所不同。因此,除定额允许调整项目外,一般不能进行调整.如人、材、机出现差距较大的情况,对费用影响较大,可编制补充定额。 6、问:对隧道洞身开挖的提问:定额中的隧道长度是指全隧道,但施工中往往是从两端分别施工,请问套用隧道长度是否还按全隧道计算。 答:按隧道全长计算. 7、问:公路建设中人工,建材,征地各成本占总成本的比率大致是多少? 答:特殊项目的含量与一般项目有所不同,如跨海大桥、超大跨径技术复杂大桥及国外项目等。一般项目占建安费比例约为:人工费15%,材料费55%,机械费30%。征地拆迁各地单价差异较大,与项目地形、地貌、路线等级等也有关系,因此变化较大,没有规律性。 8、问:新定额片石砼标号最高只有C20,使用中经常会用C25片石砼,该如何进行抽换调整答:由于C25片石砼较少见,定额没有编制C25片石砼配合比,因此无法精确抽换。近似抽换可以将定额片石砼减10.2m3,增加C25砼8.67 m3(C25也只有4cm碎石配比)。如果你有C25片石砼的配比资料,可自行抽换。

一个网站完整详细的SEO优化方案

首先,前端/页编人员主要负责站内优化,主要从四个方面入手: 第一个,站内结构优化 ?合理规划站点结构(1、扁平化结构2、辅助导航、面包屑导航、次导航) ?内容页结构设置(最新文章、推荐文章、热门文章、增加相关性、方便自助根据链接抓取更多内容)?较快的加载速度 ?简洁的页面结构 第二个,代码优化 ?Robot.txt ?次导航 ?404页面设置、301重定向 ?网站地图 ?图片Alt、title标签 ?标题 ?关键词 ?描述 ?关键字密度 ?个别关键字密度 ?H1H2H3中的关键字 ?关键字强调 ?外链最好nofollow ?为页面添加元标记meta ?丰富网页摘要(微数据、微格式和RDFa) 第三个,网站地图设置 ?html网站地图(1、为搜索引擎建立一个良好的导航结构2、横向和纵向地图:01横向为频道、栏目、专题/02纵向主要针对关键词3、每页都有指向网站地图的链接) ?XML网站地图(sitemap.xml提交给百度、google) 第四个,关键词部署 ?挑选关键词的步骤(1、确定目标关键词2、目标关键词定义上的扩展3、模拟用户的思维设计关键词4、研究竞争者的关键词) ?页面关键词优化先后顺序(1、最终页>专题>栏目>频道>首页2、最终页:长尾关键词3、专题页:【a、热门关键词b、为热点关键词制作专题c、关键词相关信息的聚合d、辅以文章内链导入链接】4、栏目页:固定关键词5、频道页:目标关键词6、首页:做行业一到两个顶级关键词,或者网站名称)

?关键词部署建议(1、不要把关键词堆积在首页2、每个页面承载关键词合理数目为3-5个3、系统规划) 然后,我们的内容编辑人员要对网站进行内容建设,怎样合理的做到网站内部优化的功效?这里主要有五个方面: 第一个,网站内容来源 ?原创内容或伪原创内容 ?编辑撰稿或UGC ?扫描书籍、报刊、杂志 第二个,内容细节优化 ?标题写法、关键词、描述设置 ?文章摘要规范 ?URL标准化 ?次导航 ?内页增加锚文本以及第一次出现关键词进行加粗 ?长尾关键词记录单 ?图片Alt、titile标签 ?外链最好nofollow ?站长工具(百度站长工具、google管理员工具等)的使用 ?建立反向链接 第三个,关键词部署 ?挑选关键词的步骤(1、确定目标关键词2、目标关键词定义上的扩展3、模拟用户的思维设计关键词4、研究竞争者的关键词) ?页面关键词优化先后顺序(1、最终页>专题>栏目>频道>首页2、最终页:长尾关键词3、专题页:【a、热门关键词b、为热点关键词制作专题c、关键词相关信息的聚合d、辅以文章内链导入链接】4、栏目页:固定关键词5、频道页:目标关键词6、首页:做行业一到两个顶级关键词,或者网站名称) ?关键词部署建议(1、不要把关键词堆积在首页2、每个页面承载关键词合理数目为3-5个3、系统规划) 第四个,内链策略 ?控制文章内部链接数量 ?链接对象的相关性要高 ?给重要网页更多的关注 ?使用绝对路径

哪个杀毒软件最好用

哪个杀毒软件最好用 篇一:八款杀毒软件横向评测系统资源占用篇 八款杀毒软件横向评测:系统资源占用篇 作者:朱志伟责任编辑:刘晶晶无 CBSi中国·ZOL 09年09月07日 [评论25条] 杀毒软件的资源占用情况一直颇受用户关注,如果占用过高,会影响到实际的运行速度和整体效果,所以,很多用户也将资源占用的高低算作了重要的考核手法,而目前8款主流杀毒软件中,整体的资源占用情况如何呢?我们就以在检测过程中的实际数值情况,进行一番横向对比。 经过测试,我们分别截取包括瑞星全功能安全软件2010版、卡巴斯基2010、ESET NOD32 版本等软件在启动和扫描过程中的资源占用情况,对其进行深度对比。看哪款软件的资源占用更低,更加适合用户使用。 1.在启动过程中的资源占用情况 下面则是各款杀毒软件产品在启动过程中的资源占用情况: 图1 八款杀毒软件在静态模式下的资源占用情况 2.在动态模式下的资源占用情况: 为详细测试杀毒软件在动态模式下的资源占用情况,我们特别对其进行了多次取值,并每次截取5组数值,下面则是详细的评测数据和图表信息。

图2 8款杀毒软件在动态模式下的资源占用情况 动态模式下的资源占用总表: 图3 8款主流杀软在动态模式下的资源占用情况 编辑点评: 从实际数据我们可以看出,瑞星杀毒软件、金山毒霸和熊猫全功能安全软件在动态模式下的资源占用相对较低,特别是来自国外的熊猫全功能安全软件,更是相对平稳,整体数据非常理想。 篇二:国产最好的杀毒软件排名 国产最好的杀毒软件排行榜(个人评价) 第1名:新毒霸(悟空) 永久免费的金山毒霸今天对外发布新一代产品:新毒霸(悟空),该产品是金山毒霸2012的升级换代版,而且永久免费。 新毒霸(悟空) 毒霸(悟空)实现了“全平台、全引擎、全面网购保护”的新一代安全矩阵,依然延续了轻巧不卡机的特点,安装包大小不到10MB,系统占用低。 第2名:瑞星杀毒 瑞星杀毒软件是一款基于瑞星“云安全”系统设计的新一代杀毒软件。其“整体防御系统”可将所有互联网威胁拦截在用户电脑以外。 深度应用“云安全”的全新木马引擎、“木马行为分析”和“启发式扫描”等技术保证将病毒彻底拦截和查杀。再结合“云安全”系统的自动分析处理病毒流程,能第一时间极速将未知病毒的解决方案

SSD固态硬盘优化设置图文教程

SSD固态硬盘优化设置图文教程 一、开启AHCI 优化SSD的第一步首先就是要确保你的磁盘读写模式为AHCI,一般来讲如果你的电脑是windows7系统,只需要在安装系统前进入BIOS设置下磁盘读写模式为“AHCI”即可,如果您已经安装过windows7,并且不确定是否磁盘工作在AHCI 模式,那么请看下面的教程: 1、点击win+R,进入运行对话框。 2、输入Regedit进入注册表。 3、选择路径“HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\msa hci”。 4、双击右侧的Start,将键值修改为“0”,重启即可。 二、更新您的芯片组驱动程序 保持最新的芯片组驱动有利于提高系统的兼容性和改善磁盘读写能力,尤其是现在SSD更新速度比较快,随时更新磁盘芯片组是非常有必要的。 Trim是一个非常重要的功能,它可以提高SSD的读写能力,减少延迟。这是wi n7支持的一项重要技术,需要通过升级来支持。 通过以上两个驱动就可以开启TRIM模式了,很多检测软件都能够看到,当删除数据后,磁盘在后台会进行TRIM操作。 三、关闭系统还原 这是一个非常重要的步骤,最近很多反馈表明系统还原会影响到SSD或者TRIM 的正常操作,进而影响SSD的读写能力。 1、右键单击我的电脑选择属性 2、选择系统保护 3、设置项

4、关闭系统还原 四、开启TRIM 确认TRIM是否开启一直是一个比较困难的事情,因为我们必须非常密切的监控电脑的状态才能判断,而往往磁盘TRIM都是瞬间的事,所以很难察觉。我们可以通过如下方式来看TRIM是否开启成功: 1、打开CMD 2、右键点击CMD以管理员模式运行 3、输入“fsutil behavior query DisableDeleteNotify” 4、如果返回值是0,则代表您的TRIM处于开启状态 5、如果返回值是1,则代表您的TRIM处于关闭状态

公路工程造价专家答疑100问(二)

公路工程造价专家答疑(二) 101、<公路工程预算定额》中4-9-3中关于钢支架,10平米立面积中的立面积在定额释义中指的是净跨径与净墩台高度的乘积吗?那在编制定额时要不要考虑桥梁宽度呢? 答:支架高度为实际搭设的高度,宽度调整参见P628说明第一条. 102、混凝土配合比定额中的“中(粗)砂”是指的“中砂”或者“粗砂”均可,还是指“中粗砂”这种材料。 答:包括中砂和粗砂 103、新定额“2-1-9机械铺筑厂拌基层稳定土混合料”是按每层铺筑厚度为多少编制的定额?如果铺筑厚度不同,应如何调整?还有,如果要进行分层碾压的话,“摊铺机”的消耗是否也要加倍呢?定额说明中好像只写到了拖拉机、平地机和压路机的台班消耗加倍计算。 答:铺筑不涉及材料消耗,与厚度无关,仅考虑压实机械的加倍. 104、旧水泥砼路面打裂稳压怎样套定额,如果没有,能提供一个门板式打裂,轮胎压路机碾压的补充定额吗? 答:尚无定额可套,我们现还没有相关资料. 105、上部结构中的现浇混凝土墙式护栏套用什么定额合适? 答:6-1-2 106、2007新编制办法第一章第六条“公路管理、养护及服务房屋应执行工程所在地的地区统一定额及相应的直接费及间接费定额”,但其他费用应按恩办法中的项目划分几计算方法编制。“问题:1、房屋工程中的利润和税金是不是按照公路专业工程的费率标准和计算方法来计算。 2、公路造价中工程定额测定费是放在建设项目其他费用中的,而房建预算该项费用一般是放在规费中的,那么编制公路工程中的房建预算时是不是应该不列房建预算的该项费用。 答:1、房建工程统一执行当地发布的定额和编制办法,包括利润。税金为国家统一标准,执行原则应没有差别。2、本项费用无论原在哪里列支,现均不再计列。 107、“当运距超过第一个定额运距单位时,其运距尾数不足一个增运定额单位的半数时不计,超过半数时按一个增运定额运距单位计算。”这个规定是仅适用于章节前有说明的定额,还是适用于全部的运输定额?如第9章的材料运输没有说明,是否也适用这条? 答:仅适用于章节有说明的定额,即运距有划分步距的定额. 108、预算定额第2页“路基土石方工程”说明的第5条:5.……当运距超过第一个定额运距单位时,其运距尾数不足一个增运定额单位的半数时不计,超过半数时按一个增运定额运距单位

nod32激活码删除

一劳永逸地激活NOD32,从此不需要NOD32激活码 一、永久激活,一劳永逸 请不要迷上nod32激活码,非得要它,激活码只是一个传说。(激活nod32就是让开机界面它消失而已无其它任何意义)解决方法如下(下面的方法选择一个即可,如果选择了一个方法,第二个自然没法选择了,因为有一个设定就可以把启动项的参数改掉,下一个方法自然找不到选项了。设定后重启立刻见效) (1):可以用360——软件管家——开机加速——“ESET安全类型软件的激活和启动向项”选禁止。 (2):开始——运行msconfig——确定——启动——关闭它的随机启动项后面的一个e ssact,(或带exe) C:\Program Files\ESET\ESET NOD32 Antivirus\\esetact\essact.exe 重启,提示打钩——确定。 (3).点开始——运行——输入REGEDIT——确定——依次打开HKEY_LOCAL_MACHI NE——SOFTWARE——microsoft——WINDOWS——CURRENTVERSION——点击R UN——删除右面ESSACT——关闭。 二、更新 1打开NOD32——更新——用户名和密码设置——添加用户名:(请到NOD32分享区找最

新用户名及密码输入)——病毒库更新——确定。 2防护状态如果是黄色的,就关闭更新。 设置——(切换至高级——是——)显示所有高级设置——系统更新——点重大更新——选择无更新——确定——点防护状态——此处——运行系统更新——请通过Windows u pdate服务器获得补丁更新——关闭下载的网页。 或点防护状态——此处——安装——立即安装-等待安装完成关闭、变绿了吧。

(环境管理)环境下使用SSD固体硬盘的优化设置方法

windows 7 下安装 SSD 固态硬盘的优化设置方法 一、确定你的电脑运行在AHCI模式 优化SSD的第一步首先就是要确保你的磁盘读写模式为AHCI,一般来讲如果你的电脑是windows7系统,只需要在安装系统前进入BIOS设置下磁盘读写模式为“AHCI”即可,如果您已经安装过windows7,并且不确定是否磁盘工作在AHCI模式,那么请看下面的教程: ▲AHCI 1、点击win+R,进入运行对话框。 2、输入Regedit进入注册表。 3、选择路径 “HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\msahci”。 4、右键修改磁盘模式为“0”,重启即可。 二、更新您的芯片组驱动程序 保持最新的芯片组驱动有利于提高系统的兼容性和改善磁盘读写能力,尤其是现在SSD更新速度比较快,随时更新磁盘芯片组是非常有必要的。 Trim是一个非常重要的功能,它可以提高SSD的读写能力,减少延迟。这是win7支持的一项重要技术,需要通过升级来支持。 通过以上两个驱动就可以开启TRIM模式了,很多检测软件都能够看到,当删除数据后,磁盘在后台会进行TRIM操作。

三、关闭系统恢复功能 这是一个非常重要的步骤,最近很多反馈表明系统还原会影响到SSD或者TRIM的正常操作,进而影响SSD的读写能力。 ▲关闭系统还原 1、右键单击我的电脑选择属性 2、选择系统保护 3、设置项 4、关闭系统还原 四、核实TRIM是否开启 确认TRIM是否开启一直是一个比较困难的事情,因为我们必须非常密切的监控电脑的状态才能判断,而往往磁盘TRIM都是瞬间的事,所以很难察觉。我们可以通过如下方式来看TRIM是否开启成功:

整理的CDE专家的解答汇总---丁香园整理

1、对已有国家标准的药品,如申请人对该标准进行了完善,并获得注册标准,请问上市后其它部门(如药检所不知道该注册标准),如何执行? 答:对已有国家标准的药品,申请人可以根据实际情况对国家标准进行提高完善;上市后,其它部门(如药检所)可以根据品种的文号,查询该品种执行的是国家标准还是注册标准,如执行注册标准,药检部门可通过一定方式得到该质量标准。当然企业也可主动告知相关部门该品种的执行标准情况。 2、药品生产中使用了较多的二类溶剂,经对大生产的数批产品证明,工艺中使用的有机溶剂已检不出,是否在申报生产的质量标准中保留对这些有机溶剂残留的检查? 答:在药品生产中使用的二类溶剂,经过对大生产的数批产品证明,如工艺中使用的有机溶剂已检不出,在申报生产时,质量标准可不保留这些有机溶剂残留的检查,但应提供较为充分的数据积累的结果。 3、有关物质检查时,辅料在HPLC图谱中有峰,按不加校正因子的自身对照品法计算约为0.1%,有关物质的限度为1.0%,若用辅料空白进行扣除时,是扣辅料峰保留时间相同的峰?还是扣除空白辅料峰的面积? 答:应该扣除空白辅料峰的面积。此种方法适用性较差,最好进一步完善方法,避免辅料的干扰。 4、对于国外尚处于I期临床阶段的新药,其质量标准往往只对含量进行规定,而对有关物质等其它项目均无规定。(1)对这类情况下的新药,在我国申请I期和临床试验的批准,CDE 如何评价其质量标准?(2)只有一批样品是否可以? 答:国外尚处于I期临床阶段的新药,其质量标准并不只对含量进行规定,对有关物质则进行更加全面的研究。国内对这类新药的质量标准,在批准I期和临床试验的时候,在保证质量可控、安全可控的前提下,有些问题是可以在临床期间完善的,但对于与安全性相关的指标(有关物质、有机残留、杂质检查等),在批准临床前必需做到安全、可控。只进行含量研究是不可行的。 鉴于与国外研究相比,目前国内相关方面的研究明显不足,在进行质量研究时,应至少采用三批样品进行研究。 5、某一原料药标准规定,含量〉96%,有关物质(TLC法)不大于8%,现在仿制过程中拟改为HPLC法测定,含量〉96%,有关物质(HPLC法)是否必需不大于4%;或更宽?制剂过程中有关物质有可能增加,其有关物质可否放宽? 答:若在仿制过程中原料药拟改为HPLC法测定,含量〉96%,有关物质(HPLC法)应该不大于4%;该限度不能随意放宽,应以规范的质量对比研究为依据,以确定其限度。制剂的有关物质应根据被仿品种的有关物质和自身产品长期稳定性试验数据进行确定,一般其杂质数量和总量均不能多于被仿制药。 6、某一化合物内含2分子结晶水,已有国家标准,其含1分子结晶水化合物性状与2分子结晶水的性状不同,可否按新药申报含1分子结晶水的化合物,同时按几类药申报? 答:按现行法规,目前含1分子结晶水化合物不能按新药申报。 7、请介绍新抗生素标准品标化方法。 答:请咨询该专业相关专家(如中检所相关部门)。 8、2005年版药典中个别品种有关物质检查采用HPLC法,对相对保留时间提出了要求,主要目的是什么? 答:2005年版药典中个别品种有关物质检查采用HPLC法,并对相对保留时间提出了要求,目的在于对已知杂质进行控制,与辅料等其它色谱峰区分开,更好的控制质量。 9、指导原则中提及能证明已上市品种辅料及生产工艺一致的,可免相关研究;事实上目前几乎无法获得上市品种完整的原辅料及工艺资料,有什么办法可以提供上述证明或者以此进

2011专家答疑第六期(建筑垃圾外运解释)

2011专家答疑第六期(建筑垃圾外运解释) ――摘自四川省2011年9期价格信息 问题1:天棚吊顶如纸面石膏板吊顶上安装灯具,2009定额装饰装修定额是否已经含其开孔费用,如果有安装单位开孔,是否应将装饰单位的开孔费用扣除? 答:安装工程定额未包含吊顶开孔费用。装饰工程装修定额已包含按设计要求预留孔或开孔费用,如装饰施工图中未设计预留孔洞,在装饰承包人已完成的吊顶上开孔费用另行计算。 问题2: 2009定额或者安全文明施工费中是否包含了施工过程产生的建筑垃圾的清理、外运费用? 答:2009定额和安全文明施工费中已包含施工过程中产生的建筑垃圾清理费用,但未包含施工过程中产生的建筑垃圾外运费用。 问题3:、勘误:2009定额建筑工程916页计量单位改为“座”。 问题4:2009定额措施项目建筑工程中221页(二)综合脚手架:“……2、综合脚手架已综合考虑了砌筑、浇筑、吊装、抹灰、油漆、涂料等脚手架费用”。此处的“抹灰、油漆、涂料”是指什么? 答:2009定额措施项目T.B建筑工程说明第三条第(二)款第2小条(221页) 2、该说明中“油漆、涂料”是指2009装饰装修工程定额B.E油漆、涂料、裱糊工程中B.E.7.1涂料及B.E.8空花格、栏杆刷涂料中的定额项目。

问题5:2009定额装饰工程中的B.F.8楼地面、墙面块料面层铲除是否包含块料面层水泥砂浆基层打底(找平)的拆除? 答:2009定额装饰装修工程B.F.8拆除、铲除中的楼地面、墙面块料面层铲除未包含其水泥砂浆找平层、打底层的拆除。水泥砂浆的拆除套用2009定额装饰装修工程B.F.8拆除、铲除中水泥砂浆铲除相应定额项目。

企业完整详细的SEO优化方案

一个网站完整详细的SEO优化方案 一个完整的SEO优化方案主要由四个小组组成: 一、前端/页编人员 二、内容编辑人员 三、推广人员 四、数据分析人员 一个网站完整详细的SEO优化方案 接下来,我们就对这四个小组分配工作。 首先,前端/页编人员主要负责站内优化,主要从四个方面入手: 第一个,站内结构优化 合理规划站点结构(1、扁平化结构2、辅助导航、面包屑导航、次导航) 内容页结构设置(最新文章、推荐文章、热门文章、增加相关性、方便自助根据链接抓取更多内容) 较快的加载速度 简洁的页面结构 第二个,代码优化 Robot.txt 次导航 404页面设置、301重定向 网站地图 图片Alt、title标签 标题 关键词 描述 关键字密度 个别关键字密度 H1H2H3中的关键字 关键字强调 外链最好nofollow 为页面添加元标记meta 丰富网页摘要(微数据、微格式和RDFa) 第三个,网站地图设置

html网站地图(1、为搜索引擎建立一个良好的导航结构2、横向和纵向地图:01横向为频道、栏目、专题/02纵向主要针对关键词3、每页都有指向网站地图的链接) XML网站地图(sitemap.xml提交给百度、google) 第四个,关键词部署 挑选关键词的步骤(1、确定目标关键词2、目标关键词定义上的扩展3、模拟用户的思维设计关键词4、研究竞争者的关键词) 页面关键词优化先后顺序(1、最终页>专题>栏目>频道>首页2、最终页:长尾关键词3、专题页:【a、热门关键词b、为热点关键词制作专题c、关键词相关信息的聚合d、辅以文章内链导入链接】4、栏目页:固定关键词5、频道页:目标关键词6、首页:做行业一到两个顶级关键词,或者网站名称) 关键词部署建议(1、不要把关键词堆积在首页2、每个页面承载关键词合理数目为3-5个3、系统规划) 然后,我们的内容编辑人员要对网站进行内容建设,怎样合理的做到网站内部优化的功效?这里主要有五个方面: 第一个,网站内容来源 原创内容或伪原创内容 编辑撰稿或UGC 扫描书籍、报刊、杂志 第二个,内容细节优化 标题写法、关键词、描述设置 文章摘要规范 URL标准化 次导航 内页增加锚文本以及第一次出现关键词进行加粗 长尾关键词记录单 图片Alt、titile标签 外链最好nofollow 百度站长工具、google管理员工具的使用 建立反向链接 第三个,关键词部署 挑选关键词的步骤(1、确定目标关键词2、目标关键词定义上的扩展3、模拟用户的思维设计关键词4、研究竞争者的关键词) 页面关键词优化先后顺序(1、最终页>专题>栏目>频道>首页2、最终页:长尾关键词3、

ESET NOD32阻止上网问题处理

ESET NOD32防病毒360专用版-安装后无法上网的解决方法 激活码:M263-0233-3S5S-JKHB-J6Q5-74YH 安装了ESETNOD32防病毒360专用版后没有办法浏览网页,请大家按照以下方法尝试设置。 一、如果您的电脑同时安装了ESETNOD32、瑞星防火墙,有可能无法打开网页。请参考以下步骤操作即可解决冲突: 1.打开NOD32主界面,按F5键进入“高级设置”。 依次打开:Web访问保护-HTTP-Web浏览器,将“瑞星防火墙”的两个选项打叉。 瑞星防火墙的默认安装路径: C:\ProgramFiles\Rising\RFW\RegGuide.exe C:\ProgramFiles\Rising\RFW\rfwProxy.exe 2.依次打开:WEB访问防护-HTTP-WEB浏览器-主动模式,将“瑞星防火墙”的两个选项保留空白。 瑞星防火墙的默认安装路径:

C:\ProgramFiles\Rising\RFW\RegGuide.exe C:\ProgramFiles\Rising\RFW\rfwProxy.exe 3.上面的步骤设置完成后,点击“确定”即可。 二、将浏览器的选项,不要选择或者是打上叉尝试。 1.您可以双击打开ESETNOD32-点击F5进入高级设置-点击HTTP-点击WEB浏览器,查看里面是否有浏览器的选项,请您不要选择或者是打上叉尝试。

2.您可以双击打开ESETNOD32-点击F5进入高级设置-点击HTTP-点击WEB浏览器-点击主动模式-查看里面是否有浏览器的选项,请您不要选择尝试。 三、如果以上两种方法还是无法解决,在“开始”-“程序”-“附件”,打开“命令提示符”程序。然后输入netshwinsockreset回车后会重置Winsock协议,按照提示重启电脑后即可解决问题。

教你如何优化设置你的ssd固态硬盘

教你如何优化设置你的ssd固态硬盘 (山东新华电脑学院整理供稿)我们都清楚固态硬盘具有读写速度快、无噪音等优点被越来越多的人所接受,但是如果你购买了固态硬盘还得会进行适当的优化,如果没有很好地进行ssd固态硬盘优化设置不一定能发挥他的特点,那么今天学无忧就来给大家讲讲如何优化你的固态硬盘,通过这篇ssd 固态硬盘优化设置教程希望能够让你更加最优发地发挥固态硬盘特点。 一、设置磁盘读写AHCI模式 ssd固态硬盘优化设置的第一步就是我们需要对我们的磁盘读写模式设为AHCI模式,一般来讲如果你的电脑是windows7系统,只需要在安装系统前进入BIOS设置下磁盘读写模式为“AHCI”即可。 二、升级芯片组驱动程序 如果我们的芯片组驱动不是最新的,那么就有可能无法发挥ssd固态硬盘的读写能力,尤其是现在SSD更新速度比较快,随时更新磁盘芯片组是非常有必要的。 三、关闭系统恢复功能

最近很多网友反馈如果打开了系统恢复功能会影响到SSD或者TRIM的正常操作,进而影响SSD的读写能力,这样我们就有必要对系统恢复功能进行关闭。 四、核实TRIM是否开启 确认TRIM是否开启一直是一个比较困难的事情,因为我们必须非常密切的监控电脑的状态才能判断,而往往磁盘TRIM都是瞬间的事,所以很难察觉。我们可以通过如下方式来看TRIM是否开启成功,方法如下: 1、打开CMD 2、右键点击CMD以管理员模式运行 3、输入“fsutil behavior query DisableDeleteNotify” 4、如果返回值是0,则代表您的TRIM处于开启状态 5、如果返回值是1,则代表您的TRIM处于关闭状态 五、关闭磁盘索引 磁盘索引的目的是为了加速进入相应的文件夹和目录,但是SSD产品本身的响应时间非常低,仅为0.1ms级别,相比传统硬盘快了百倍之多,所以没有必要做索引,过多的索引只会白白减少SSD的寿命。 六、关闭磁盘整理计划 win7默认情况下磁盘碎片整理是关闭的,但是在某些情况下可能会自动开启。因为SSD上不存在碎片的问题,所以没有必要让它开启,频繁的操作只会减少其寿命。 七、关闭磁盘分页 这是一个广受争论的优化选项,很多人怀疑这是否有利于提高性能。但无论怎么样关闭这个选项可以为你带来额外的3.5GB-4GB的告诉存储空间。我们并不推荐少于4GB RAM的用户关闭此选项。

【干货】真菌检测中常见问题专家解答

【干货】真菌检测中常见问题专家解答 近年来,随着恶性肿瘤的高发、艾滋病的流行、化疗药物、广谱抗生素、糖皮质激素和免疫抑制剂等药物的广泛使用,以及人工导管等侵入性操作、器官移植等有创诊疗技术的应用,由真菌所引起的感染日益成为临床各科室面临的巨大挑战。为了提高临床诊断水平,加强真菌的检验能力显得至关重要,现在临床微生物实验室有关真菌检验的常见问题汇总如下,供大家学习参考。1、为什么培养要设定 35 C? 实验室通常将孵箱温度设定为 35 C,是为了防止温度的波动对细菌或真菌生长的影响。临床常见细菌的最适生长温度为33?37 C (嗜热,嗜中温菌除外,占少数),设定为35C时上下波动2C对培养无影响,如设定为37C C显然就不合适了。至于真菌培养 ,要视标本来源而定。一般怀疑浅部真菌感染的标本如皮屑、甲屑、头屑及毛发等分离培养于26?28 C。来源于人体深部组织的标本如痰、胸腹水、血及骨髓深部脓肿等建议放35 C培养,是刚离体的标本在 35 C更接近于人体温度,其次临床最常见的白念珠菌在35 C能形成更为典型的伪 足样菌落,这与血清出芽试验在 35 C做是同样的道理。同时’ 来源于35 C初分离的光滑念珠菌具有更高的酶活性。怀疑温 度型双相真菌感染或个别真菌需要较高温度生长时则需要两种温度同时培养。还要注意培养环境的湿度 ,一般要求大于 60%,如果培养箱的湿度不够 ,最好在平板附近放置盛有无菌水的容器以保证湿

度。 2、CO2 对真菌生长影响大吗 ?真菌属于异养型微生物,主要从有机化合物中获得碳源 ,而自养型微生物才需要从 CO2 中获得碳源,所以真菌一般不需要在二氧化碳环境中培养,而且CO2 对真菌的生长和繁殖均不利 ,但一定浓度可刺激孢子形成。 3、在血培养中培养出真菌 2 次,但是患儿没有临床表现,而且临床也没用抗真菌药患儿就好了,是考虑污染吗 ? 这种情况要判断是否为污染菌,调查采血过程是否规范、血瓶转运过程是否符合要求、针孔是否适当封口、转运箱是否受到污染等众多因素,最关键的还是患者的临床表现,如没有真菌感染的症状,要么考虑污染,要么考虑定植(可能性不大)。 4、我们基层医院实际工作中做阴道分泌物真菌培养,生化鉴定几乎做不了,很多直接报白念珠菌,这样合适吗?这样做不合适。目前有些检验科甚至分不清酵母菌和霉菌,在阴道白带、尿液和粪便中镜检发现念珠菌后报告为“找到霉菌”。霉菌是指丝状多细胞真菌;酵母菌是指单细胞形态的真菌,二者不可混为一谈。阴道中感染的酵母菌大多是白念珠菌,但不排除其他酵母菌。克柔念珠菌对氟康唑和5- 氟胞嘧啶天然耐药,如果遇到类似存在天然耐药的菌株,会影响后续的治疗。 5、痰标本中少量念珠菌需要在报告中体现吗?还是不报告 ? 标本要求和检验程序用要体现,临床医生会综合考虑。虽然 白色念珠菌导致肺部真菌感染的概率很小,遇到痰标本真菌培养中分离出该真菌,还是建议报告,临床医生结合影像学结果综合考虑。

NOD32启发式Heuristic

——检测未知病毒 反病毒软件除了要被动地检测出已知病毒外,还更应该主动地扫描未知病毒。 那么,反病毒软件的扫描器到底是如何工作的 呢? 信息安全研究和咨询顾问 Andrew Lee(安德鲁?李) 首席研究主管 ESET公司 本文由NOD32中国官方论坛组织翻译 https://www.sodocs.net/doc/1c18405958.html,/forum/

目录 页数简介5了解检测6 病毒 6 蠕虫7 非复制型恶意软件7 启发式究竟指什么?9 特征码扫描10 启发式的对立面11 广谱防毒技术12 我绝对肯定13 灵敏度与误报14 测试的争议16 结论:启发式技术的尴尬19参考文献21术语表22

作者简介 大卫·哈利 大卫·哈利从20世纪80年代末就开始研究并撰写有关恶意软件和其它电脑安全问题的文章。2001-2006年,他是英国国家健康中心(UK’s National Health Service)的国家基础设施安全管理人员,专门研究恶意软件和各种形式的电子邮件的滥用,负责威胁评估中心(Threat Assessment Center)的运作。2006年4月起,他从事独立作家和安全技术顾问的相关工作。 大卫的第一部重要著作是《病毒揭秘(Viruses Revealed)》(Harley,Slade,Gattiker),奥斯本的计算机病毒防护全面指南。他参与编写并审核了很多其它有关计算机安全和教育方面的书籍,以及大量的文章和会议论文。最近,他作为技术编辑和主要撰稿人参加了《企业防御恶意软件的AVIEN指南(The AVIEN Guide to Malware Defense in the Enterprise)》的编写。这本书将在2007年由Syngress出版社出版。 联系地址:8 Clay Hill House, Wey Hill, Haslemere, SURREY GU27 1DA 电话:+44 7813 346129 网址:https://www.sodocs.net/doc/1c18405958.html, 安德鲁·李 安德鲁·李,国际信息系统安全协会会员,ESET公司的首席研究员。他是反病毒信息交换网络(Anti-Virus Information Exchange Network,AVIEN)和它的姊妹团体——AVIEN信息和早期预警系统(AVIEN Information & Early Warning System,AVIEWS)的创建者之一。他是亚洲反病毒研究协会(The Association of Anti-virus Asia Researchers,AVAR)的会员,也是WildList国际组织(一个负责收集流行性计算机病毒活体样本的组织)的记者。在加入ESET公司之前,他作为一名

硬盘的正确使用、维护及优化技巧

硬盘的正确使用、维护及优化技巧 文章来源:文章作者:发布时间:2006-03-24 字体: [大中小] 一、使用 硬盘是集精密机械、微电子电路、电磁转换为一体的电脑存储设备,它存储着电脑系统资源和重要 的信息及数据,这些因素使硬盘在PC机中成为最为重要的一个硬件设备。虽说名牌硬盘的无故障工作时间(MTBF)可超过2万个小时——按每天工作10小时计算其能正常使用5年以上,但如果使用不当的话,也是非常容易就会出现故障的,甚至出现物理性损坏,造成整个电脑系统不能正常工作。下面笔者就先 说一下如何正确地使用硬盘。 1、正确地开、关主机电源 当硬盘处于工作状态时(读或写盘时),尽量不要强行关闭主机电源。因为硬盘在读、写过程中如 果突然断电是很容易造成硬盘物理性损伤(仅指AT电源)或丢失各种数据的,尤其是正在进行高级格式化时更不要这么做——笔者的一位朋友在一次高格时发现速度很慢就认为是死机了,于是强行关闭了电源。再打开主机时,系统就根本发现不了这块硬盘了,后经查看发现“主引导扇区”的内容全部乱套, 最可怕的是无论使用什么办法也无法写入正确的内容了…… 另外,由于硬盘中有高速运转的机械部件,所以在关机后其高速运转的机械部件并不能马上停止运转,这时如果马上再打开电源的话,就很可能会毁坏硬盘。当然,这只是理论上的可能而已,笔者并没 有遇到过因此而损坏硬盘的事,但对于这样的事“宁可信其有,不可信其无”,还是保险至上!所以我 们尽量不要在关机后马上就开机,我们一定要等硬盘马达转动停稳后再次进行开机(关机半分钟后), 而且我们应尽量避免频繁地开、关电脑电源,因为硬盘每启动、停止一次,磁头就要在磁盘表面“起飞”和“着陆”一次,如果过于频繁地话就无疑增加了磁头和盘片磨损的机会。 2、硬盘在工作时一定要防震 虽然磁头与盘片间没有直接接触,但它们之间的距离的确是离得很近,而且磁头也是有一定重量的,所以如果出现过大的震动的话,磁头也会由于地心引力和产生的惯性而对盘片进行敲击。这种敲击无疑

SEO优化核心重点

SEO总纲核心要点 原创文章内容来源:从书本杂子或电子书上复制相应文章做成网站原创文章是原创文章来源的最好途径。 网站程序简化:网站上可有可无的功能和模块就一定要去掉,只要能满足用户的需求,程序越简单越好。 四处一词:何谓四处一词? 第一处:当前页面的标题上出现这个关键词; 就是单页面的title标签里面要放置你所做的关键词。 第二处:当前页面关键词标签、描述标签里出现这个关键词(如果是英文关键词,请在URL 里也出现); 关键词标签keyw,还有desc描述标签里面放关键词,如果是英文的,还需要在英文的链接里面合理放置关键词。 第三处:当前页面的内容里,多次出现这个关键词,并在第一次出现时,加粗; 文章中出现很多次“四处一词”的时候,那么对它进行加粗。 第四处:其他页面的锚文本里,出现这个关键词。 如果其他地方出现了这个词那么要加锚文本到这个词的链接。 站内定向锚文本: 大家都知道锚文本对于seo的重要性,一定程度上可以说做seo实际就是在做锚文本。 因为内链和外链建设都是用锚文本,内链提高用户体验、外链提高网站权重与流量导入。 那么什么是锚文本,例如青少年早恋就是一个锚文本,它的作用就是建立文本关键词与URL链接之间的关系。搜索引擎抓取页面时,对锚文本包含的文本关键词的读取,可以评估 此页面内容的属性,同时也知道这个链接对应的页面或是网站内容的属性。我们的网站上随 处都可以看见锚文本,例如分类目录上、文章内链、友情链接。而文章内链可以提高用户体验度和引导蜘蛛爬取全站、友情链接能引导流量和搜索引擎抓取,这些都是锚文本的功劳。只要我们将鼠标放到关键词上,

在流量器下方出现相应的网址,它就是锚文本。 实际上我们做的锚文本大多数都是定向锚文本。例如https://www.sodocs.net/doc/1c18405958.html,/ 这个URL 的关键词是青少年早恋,高中生早恋,孩子早恋,初中生早恋。那么在其他内容页面上给这 个URL做锚文本时采用了这四个关键词中的一个,这个锚文本就是定向锚文本。搜索引擎在抓取内容时,如果内容页面做了非常细致的定向锚文本,那么搜索引擎会很有可能判定这 篇内容为原创。精准的站内定向锚文本,对网站的整体权重提升有很大的帮助。 网站提交:我知道可以通过Google网站管理员工具向Google提交网站地图Sitemap , 那么我如何向百度、雅虎等其他搜索引擎提交呢?我是否需要制作一个类似Google Sitemap 一样的baidu Sitemap 呢? 答案:搜索引擎都遵循一样的Sitemap 协议,baidu Sitemap 内容和形式可以和Google Sitemap完全一样。但因为百度还没开通类似Google网站管理员工具的提交后台,所以,我们需要采用以下方式提交"baidu Sitemap"。 我们可以通过在robots.txt 文件中添加以下代码行来告诉搜索引擎Sitemap的存放位置。包 括XML Sitemap和文本形式的Sitemap 。 Sitemap:

最新可用eset nod32 24位激活码

最新可用eset nod32 24位激活码 M26H-0233-3VG8-4GA7-439R-DY6G M26A-0233-3V3P-CWWW-D4HQ-P7NT M26A-0233-3V3P-CWFS-46GH-KJHF M26G-0233-3WEC-9FQN-N7BW-C98N M26G-0233-3WEC-AX5Y-X6HT-43SS M26G-0233-3WEC-CF77-M6NH-6PNS M26G-0233-3WEJ-DGQF-436B-ULJ8 M26G-0233-3WEJ-EKVH-N3PW-Q77A M26G-0233-3WEJ-FMND-L4PQ-Q6ET M26A-0233-3UAH-XNGV-P4HX-J9YG M26A-0233-3U83-74TV-54RM-TGLU M26A-0233-3UAJ-JU95-F7CM-UGLX M26A-0233-3UB3-QL57-74QX-CN8G M26G-0233-3WE5-XGTY-P5FQ-SWEH M26G-0233-3WE5-XU8P-X4AT-5EY7 M26G-0233-3WE5-YALP-K5WP-EDCN M26G-0233-3WE5-YRLE-B6LM-7DWE M26G-0233-3WE6-3AN3-93SN-BJU8 M26G-0233-3WE6-3PQ4-M7CT-83LW M26G-0233-3WE6-45N9-N5TT-MHUS M26G-0233-3WEB-NEY9-V8JA-SL6J M26G-0233-3WEB-PXKV-E4SH-BW7M M26G-0233-3WEC-84JB-C8TE-998Y M26A-0233-3UB3-XQYF-A6YX-AFS7 M26A-0233-3UB4-4NVR-J6RC-NM9G M26A-0233-3UB4-5XX7-58MK-PB8C M26A-0233-3UB4-6X6F-Y5SC-6PDY M26A-0233-3UB4-7LR5-Y5WY-U97R M26G-0233-3WE5-THGM-R4VD-AF4X M26G-0233-3WE5-VX4P-B3NN-97DY M26G-0233-3WE5-WT5Y-38NN-PURA M26A-0233-3E6S-HAKD-H5QM-P3KG

相关文档

- 混凝土规范相关问题专家答疑整理版

- 公路工程造价专家答疑100问(二)

- 【问题征集通知】第二次专家答疑问题征集

- 专家答疑活动简报

- 专家答疑:考研翻译的评分标准及应考对策

- 公路工程造价专家答疑100问【最新】

- 会计专家答疑收集

- 2009定额定额解释及专家答疑

- 2011专家答疑第六期(建筑垃圾外运解释)

- 20kV及以下配电网工程定额专家答疑汇编

- 2016年华为软件大赛专家答疑记录

- 玉金方胶囊专家答疑

- 关于石棉检测的专家答疑

- 桥梁通专家答疑1

- 儿科专家答疑儿童常见问题

- 《四川省系列定额专家答疑》

- 公路工程造价专家答疑100问(二)

- 交通部定额站300问 造价专家答疑 完整

- 【通知】第一次专家答疑问题征集

- 公路工程造价专家答疑100-2