Fine Hand Segmentation using Convolutional Neural Networks

Fine Hand Segmentation using Convolutional Neural Networks

Tadej Vodopivec 1,2

Vincent Lepetit 1

Peter Peer 2

1

Institute for Computer Graphics and Vision,Graz University of Technology,Austria

2

Faculty of Computer and Information Science,University of Ljubljana,Veˇc na pot 113,1000Ljubljana,Slovenia

Abstract

We propose a method for extracting very accurate masks of hands in egocentric views.Our method is based on a novel Deep Learning architecture:In con-trast with current Deep Learning methods,we do not use upscaling layers applied to a low-dimensional rep-resentation of the input image.Instead,we extract features with convolutional layers and map them di-rectly to a segmentation mask with a fully connected layer.We show that this approach,when applied in a multi-scale fashion,is both accurate and e?cient enough for real-time.We demonstrate it on a new dataset made of images captured in various environ-ments,from the outdoors to o?ces.

1Introduction

To ensure that the user perceives the virtual objects as part of the real world in Augmented Reality ap-plications,these objects have to be inserted convinc-ingly enough.By far,most of the research in this di-rection has focus on 3D pose estimation,so that the object can be rendered at the right location in the user’s view [19,8,15].Other works aim at rendering the light interaction between the virtual objects and the real world consistently [5,14].

Signi?cantly less works have tackled the problem of correctly rendering the occlusions which occur when a real object is located in front of a virtual one.[9]provides a method that requires interaction with a human and works only for rigid objects.[17]relies on background subtraction but this is prone to fail when foreground and background have similar col-ors.Depth cameras bring now an easy solution to handling occlusions,however,they provide a poorly accurate 3D reconstruction of the occluding bound-aries of the real objects,which are essential for a con-vincing perception.The human perception is actu-ally very sensitive to small deviations from the actual locations in occlusion rendering,making the problem very challenging [21].

With the development of hardware such as the HoloLens,which provides precise 3D registration and crisp rendering of the virtual objects,egocentric Aug-mented Reality applications can be foreseen to be-come very popular in the near future.This is why we focus here on correct rendering of occlusions by the user’s hands of the virtual objects.More exactly,we assume that the hands are always in front of the vir-tual objects,which is realistic for many applications,and we aim at estimating a pixel-accurate mask of the hands in real-time.

The last years have seen the development of dif-ferent segmentation methods based on Convolutional Neural Networks [12,2].While our method also re-lies on Deep Learning,its architecture has several fundamental di?erences.It is partially inspired by Auto-Context [18]:Auto-Context is a segmentation method in which a segmenter is iterated,and the seg-mentation result of the previous step is used in the next iteration in addition to the original image.

The fundamental di?erence between our approach and the original Auto-Context is that the initial seg-mentation is performed on a downscaled version of the input image.The resulting segmentation is then upscaled before being passed to the second iteration.This allows us to take the context into account very e?ciently.We can also obtain precise localization of

1

a r X i v :1608.07454v 1 [c s .C V ] 26 A u g 2016

the segmentation boundaries,because we avoid using pooling.

In the remainder of the paper,we?rst discuss re-lated work,then describe our method,and?nally present and discuss our results on a new dataset for hand segmentation.

2Related Work

Hand segmentation is a very challenging task as hands can be very di?erent in shape and skin color, look very di?erent under another viewpoint,can be closed or open,can be partially occluded,can have di?erent positions of the?ngers,can be grasping ob-jects or other hands,etc.

Skin color is a very obvious cue

[1,7],unfortu-nately,this approach is prone to fail as other objects may have a similar color.Other approaches assume that the camera is static and segment the hands based on their movement[3],use a simple or even single-color background[10],or rely on depth information obtained by an RGB-D camera[6].None of these ap-proaches can provide accurate masks in general con-ditions.

The method we propose is based on convolutional neural networks[11].Deep Learning has already been applied to segmentation,and recent architec-tures tend to be made of two parts:The?rst part applies convolutional and pooling layers to the input image to produce a compact,low-resolution represen-tation;the second part applies deconvolutional layers to this representation to produce the?nal segmenta-tion,at the same resolution as the input image.This typically results in oversmoothed segments,which we avoid with our approach.

3Method

In this section,we describe our approach.We?rst present our initial architecture based on multiscale analysis of the input.We then split this architecture in two to obtain our more e?cient,?nal architecture. We?nally detail our methodology to select the meta-parameters of this architecture.

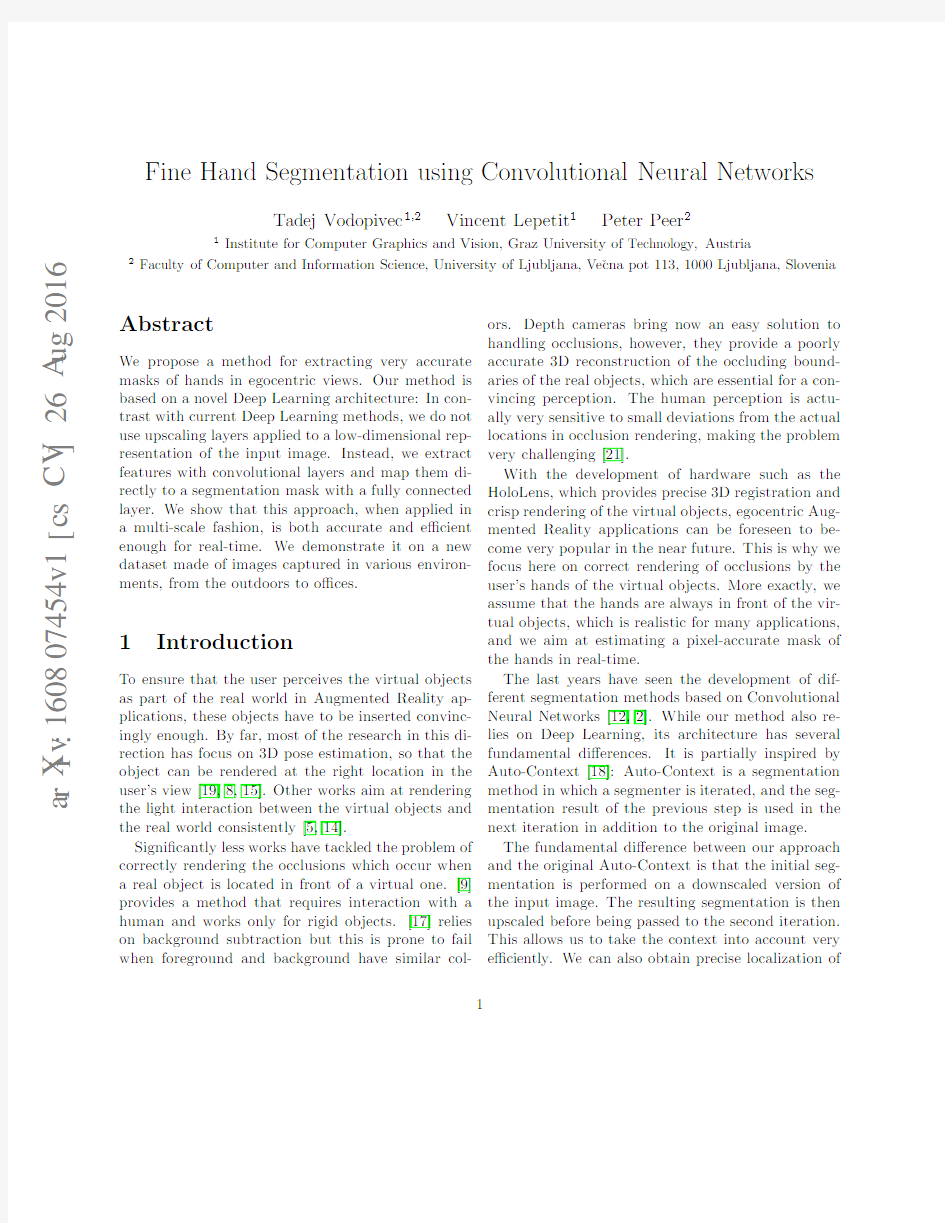

Figure1:The architecture for the two components of our network.We extract features with convolu-tional layers without using pooling layers and map them directly to the output segmentation with a fully connected layer.For clarity,we show only one convo-lutional layer,and both the number of feature maps n and the number of neurons in the fully connected layer m are underrepresented.

3.1Initial Network Architecture

As shown in Figure1,our initial network was made of three chains of three convolution layers each.The ?rst chain is directly applied to the input image,the second one to the input image after downscaling by a factor two,and the last one to the input image after downscaling by a factor four.We do not use pooling layers here,which allows us to extract the?ne details of the hand masks.

The outputs of these three chains are concatenated together and given as input to a fully connected lo-gistic regression layer,which outputs for each pixel its probability of lying on a hand.

3.2Splitting the Network in Two

The network described above turned out to be too computationally intensive for real-time.To speed it up,we developed an approach that is inspired by Auto-Context[18].Auto-Context is a segmentation method in which a segmenter is iterated,taking as input not only the image to segment but also the segmentation result of the previous iteration.The fundamental di?erence between our approach and the original Auto-Context is that the initial segmentation 2

Figure2:Two-part network architecture.As in Fig-ure1,only one convolutional layer is shown,and the number of feature maps and the number of neurons on the fully connected layer are underrepresented in both parts of the classi?er to make the representation more understandable.The second part of the net-work receives as input the output of the?rst part af-ter upscaling but also the original image,which helps segmenting?ne details.

is performed on a downscaled version of the input im-age.

As seen in Figure2,the?rst part performs the seg-mentation on the original image after downscaling by a factor16,and outputs a result of the same resolu-tion.Its output along with the original image is then used as input to the second part of the new network, which is a simpli?ed version of the initial network to produce the?nal,full-resolution segmentation.The two parts of the network have very similar structures. The di?erence is that the second part takes as in-put the original,full resolution input image together with the output of the?rst part after upscaling.The ?rst output already provides a?rst estimate of the position of the hands;the second part uses this in-formation in combination with the original image to e?ectively segment the image.An example of the fea-ture maps computed by the?rst part can be seen in Figure4.

The advantage of this split is two-fold:The?rst part runs on a small version of the original image, and we can considerably reduce the number of fea-ture maps and use smaller?lters in the second part without loosing accuracy.

3.3Meta-Parameters Selection

There is currently no good way to determine the opti-mal?lter sizes and numbers of feature maps,so these have to be guessed or determined by trial and error. For this reason we trained networks with the same structure,but di?erent parameters and compared the accuracy and running time.We?rst identi?ed pa-rameters that produced the best results while ignor-ing processing time and then simpli?ed the model to reduce processing time while retaining as much accu-racy as possible.

Our input images have a resolution of752×480, scaled to188×120,94×60,and47×30and input to the?rst part of the network.The?rst two layers of each chain output32feature maps and the third layer outputs16feature maps.We used?lters of size 3×3,5×5,and7×7pixels for the successive layers. For the second part,the?rst layer outputs8feature maps,the second layer4feature maps,and the third layer outputs the?nal probability map.We used3×3?lters for all layers.

We used the leaky recti?ed linear unit as activa-tion function[13].We minimize a boosted cross-entropy objective function[20].This function weights the samples with lower probabilities more.We used α=2as proposed in the original paper.We used RMSprop[4]for optimization.

To avoid over?tting and to make the classi?er more robust,we augmented the training set using very sim-ple geometric transformations:We used scaling by a random factor between0.9and1.1,rotating for up to10degrees,introducing shear for up to5degrees, and translating by up to20pixels.

4Results

In this section,we describe the dataset we built for training and testing our approach,and present and discuss the results of its evaluation.

4.1Dataset

We built a dataset of samples made of pairs of images and their correct segmentation performed manually. Figure5shows some examples.

3

Figure3:Original input image,its ground truth seg-mentation,and some of the resulting feature maps computed by the?rst part of the

network.

Figure4:Example of output of the?rst part from the ?nal architecture.Shades of grey represent the prob-abilities of the hand class over the pixel locations. We focused on egocentric images,i.e.the hands are seen from a?rst-person perspective.Several sub-jects acquired these images using a wide-angle camera mounted on their heads,near the eyes.The camera was set to take periodic images of whatever was in the ?eld of view at that time.In total348images were taken.90%of the images were used for training,and the rest was used for testing.

191of those images were taken in an o?ce at

6

Figure5:Some of the images from our dataset and their ground truth segmentations.

di?erent locations under di?erent lighting conditions. The remaining157images were taken in and around a residential building,while performing everyday tasks like walking around,opening doors etc.The images were taken with an IDS MT9V032C12STC sensor with resolution of752×480pixels.

4.2Evaluation

Figure6shows the ROC curve for our method applied to our egocentric dataset.When applying a threshold of50%to the probabilities estimated by our method, we achieve a99.3%accuracy on our test set,where the accuracy is de?ned as the percentage of pixels that are correctly classi?ed.Figure7shows that this accuracy can be obtained with thresholds from a large range of values,which shows the robustness of the method.Qualitative results can be seen in Figures8 and9.

4.3Meta-parameter Fine Selection

In total,we trained98networks for the reduced res-olution segmentation estimation and95networks for 4

(a)(b)(c)(d)

Figure9:Images and their segmentations.(a)Original image;(b)Upscaled segmentation predicted by the ?rst part of our network;(c)Final segmentation;(d)composition of the?nal segmentation into the original image.

the full resolution?nal classi?er.

After meta-parameter?ne selection for the?rst part of the network,we were able to achieve the ac-curacy of98.3%at16milliseconds per image,where the?rst layer had32feature maps and3×3?lter,the second layer32feature maps and5×5?lters,and the third layer had16feature maps and7×7?lters. With further meta-parameters?ne selection for the

second part of the network,we obtained a network reaching99.3%for a processing time of39millisec-onds.The?rst layer(of the second part)had8fea-ture maps,the second layer4feature maps,and the third layer1feature map.All layers used3×3?lters.

During this?ne selection process we noticed that the best results were achieved when the number of ?lters was higher for earlier layers and?lter sizes 5

Figure6:ROC curve obtained with our method on our challenging dataset of egocentric images.The?g-ure also shows a magni?cation of the top-left corner. were bigger at later layers.The reduced resolution segmentation estimation already provided very good results,but it still produced some false positives.Be-cause of the lower resolution the edges were not as smooth as desired.The full resolution?nal classi-?er was in most cases able to improve both the false positives and produce smoother edges.

4.4Evaluation of the Di?erent As-

pects of the Method

4.4.1Convolution on Full Resolution With-

out Pooling and Upscaling

To verify that splitting the classi?er into two parts performs better than a more standard classi?er,

we

Figure7:Accuracy of the classi?er depending on probability threshold.Best accuracy can be obtained for a large range of thresholding

values.

(a)(b)(c) Figure8:Comparison between the ground truth segmentations and the predicted ones.(a)Ground truth,(b)prediction,(c)di?erences.Errors are typ-ically very small,and1-pixel thin.

trained a classi?er to perform segmentation on full resolution images without?rst calculating the re-duced resolution segmentation estimation.

We used the same structure as the second part of 6

our classi?er and modi?ed it to only use the origi-nal image.To compensate the absence of input from the?rst part,we tried using more feature maps.The best trade-o?we found was using16feature maps and ?lter size of5×5pixels on each of the three layers—instead of3×3and8,4,and1feature maps.Be-cause of memory size limit on the used GPU,we were not able to train a more complex classi?er,which may produce better results.Nevertheless,processing time per image was185milliseconds with accuracy of94.0%,signi?cantly worse than the proposed ar-chitecture.

4.4.2Upscale Without the Original Image To verify that the second part of the classi?er bene-?ted from re-introducing the original image compared to only having results of the?rst part,we trained a classi?er like the one suggested in this work,but this time we provided the second part of the classi?er with only results of the?rst part.In this experiment pro-cessing time was36.7milliseconds,compared to39.2 milliseconds in the suggested classi?er and the accu-racy fell from99.3%to98.6%.Processing was there-fore faster,but the second part of the classi?er was not able to improve the accuracy much further.The second part of the classi?er was able to correct some false positives from the?rst part,but unable to im-prove accuracy along the edges between foreground and background.

4.5Comparison to a Color-based

Classi?cation

As discussed in the introduction,segmentation based on skin color is prone to fail as other parts of the image can have similar colors.To give a compari-son we applied the method described in[16]to our test set and obtained an accuracy of81%,which is signi?cantly worse than any other approach we tried. 5Conclusion

Occlusions are crucial for understanding the position of objects.In Augmented Realist applications,their

exact detection and correct rendering contribute to the feeling that an object is a part of the world around the user.We showed that starting with a low reso-lution processing of the image helps capturing the context of the image,and using the input image a second time helps capturing the?ne details of the foreground.

References

[1]Z.Al-Taira,R.Rahmat,M.Saripan,and P.Su-

laiman.Skin Segmentation Using YUV and

RGB Color Spaces.Journal of Information Pro-

cessing Systems,10(2):283–299,2014.

[2]V.Badrinarayanan,A.Kendall,and R.Cipolla.

SegNet:A Deep Convolutional Encoder-

Decoder Architecture for Image Segmentation.

arXiv Preprint,2015.

[3]L.Baraldi, F.Paci,G.Serra,L.Benini,and

R.Cucchiara.Gesture recognition in ego-centric

videos using dense trajectories and hand seg-

mentation.In Proceedings of the IEEE Confer-

ence on Computer Vision and Pattern Recogni-

tion Workshops,pages688–693,2014.

[4]Y.Dauphin,H.de Vries,J.Chung,and Y.Ben-

gio.RMSProp and Equilibrated Adaptive

Learning Rates for Non-Convex Optimization.

In arXiv,2015.

[5]P.Debevec.Rendering Synthetic Objects into

Real Scenes:Bridging Traditional and Image-

Based Graphics with Global Illumination and

High Dynamic Range Photography.In ACM

SIGGRAPH,July1998.

[6]Y.-J.Huang,M.Bolas,and E.Suma.Fusing

Depth,Color,and Skeleton Data for Enhanced

Real-Time Hand Segmentation.In Proceedings

of the First Symposium on Spatial User Interac-

tion,pages85–85,2013.

[7]M.Kawulok,J.Nalepa,and J.Kawulok.Skin

Detection and Segmentation in Color Images,

2015.

7

[8]G.Klein and D.Murray.Parallel Tracking and

Mapping for Small AR Workspaces.In ISMAR, 2007.

[9]V.Lepetit and M.Berger.A Semi Automatic

Method for Resolving Occlusions in Augmented Reality.In Conference on Computer Vision and Pattern Recognition,June2000.

[10]Y.Lew,R. A.Rhaman,K.S.Yeong,

A.Roslizah,and P.Veeraraghavan.A Hand Seg-

mentation Scheme using Clustering Technique in Homogeneous Background.In Student Confer-ence on Research and Development,2002. [11]J.Lon,E.Shelhamer,and T.Darrell.Fully Con-

volutional Networks for Semantic Segmentation.

In Proceedings of the IEEE Conference on Com-puter Vision and Pattern Recognition,2015. [12]J.Long,E.Shelhamer,and T.Darrell.Fully

Convolutional Networks for Semantic Segmen-tation.In Conference on Computer Vision and Pattern Recognition,2015.

[13]A.Maas, A.Hannun,and A.Ng.Recti?er

Nonlinearities Improve Neural Network Acous-tic Models.In Proceedings of the International Conference on Machine Learning Workshop on Deep Learning for Audio,Speech,and Language Processing,2013.

[14]M.Meilland,C.Barat,and https://www.sodocs.net/doc/0219032212.html,port.3D

high dynamic range dense visual slam and its ap-plication to real-time object re-lighting.In Inter-national Symposium on Mixed and Augmented Reality,pages143–152,2013.

[15]R.Newcombe,S.Izadi,O.Hilliges,

D.Molyneaux,D.Kim,A.J.Davison,P.Kohli,

J.Shotton,S.Hodges,and A.Fitzgibbon.

KinectFusion:Real-Time Dense Surface Map-ping and Tracking.In International Symposium on Mixed and Augmented Reality,2011.

[16]S.Phung,A.Bouzerdoum,and D.Chai.Skin

Segmentation using Color Pixel Classi?cation: Analysis and Comparison.IEEE Transactions

on Pattern Analysis and Machine Intelligence,

27(1):148–154,2005.

[17]J.Pilet,V.Lepetit,and P.Fua.Retexturing in

the Presence of Complex Illuminations and Oc-

clusions.In International Symposium on Mixed

and Augmented Reality,2007.

[18]Z.Tu and X.Bai.Auto-Context and Its Ap-

plications to High-Level Vision Tasks and3D

Brain Image Segmentation.IEEE Transactions

on Pattern Analysis and Machine Intelligence,

2009.

[19]L.Vacchetti,V.Lepetit,and P.Fua.Stable

Real-Time3D Tracking Using Online and Of-

?ine Information.PAMI,26(10),October2004.

[20]H.Zheng,J.Li, C.Weng,and C.Lee.Be-

yond Cross-Entropy:Towards Better Frame-

Level Objective Functions for Deep Neural Net-

work Training in Automatic Speech Recognition.

In Proceedings of the InterSpeech Conference,

2014.

[21]S.Zollmann, D.Kalkofen, E.Mendez,and

G.Reitmayr.Image-Based Ghostings for Sin-

gle Layer Occlusions in Augmented Reality.In

International Symposium on Mixed and Aug-

mented Reality,2010.

8